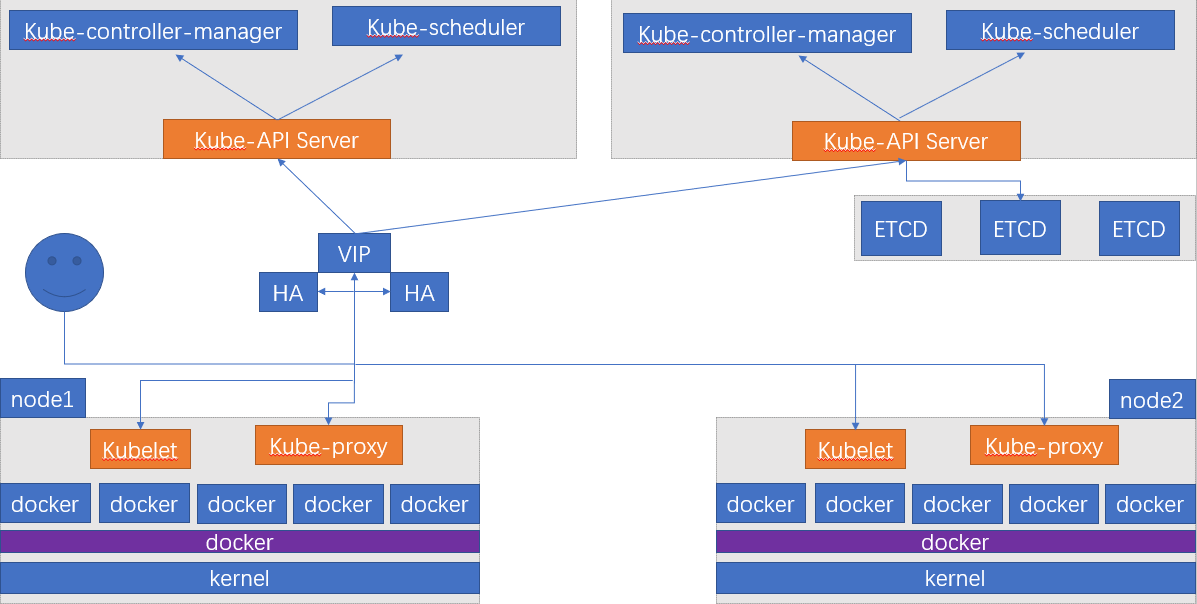

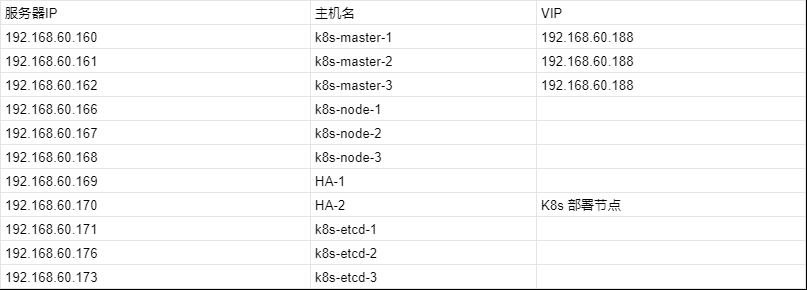

Architecture:

Environment preparation:

OS: Ubuntu 22.04 LTS

1. High-availability load balancing:

High-availability reverse proxy for k8s (run on both HA machines):

Install keepalived and haproxy:

apt install keepalived haproxy -y

Configure keepalived:

root@ha-1:~# find / -name keepalived*

/etc/init.d/keepalived

/etc/default/keepalived

/etc/systemd/system/multi-user.target.wants/keepalived.service

/etc/keepalived

/var/lib/dpkg/info/keepalived.prerm

/var/lib/dpkg/info/keepalived.list

/var/lib/dpkg/info/keepalived.conffiles

/var/lib/dpkg/info/keepalived.postinst

/var/lib/dpkg/info/keepalived.postrm

/var/lib/dpkg/info/keepalived.md5sums

/var/lib/systemd/deb-systemd-helper-enabled/multi-user.target.wants/keepalived.service

/var/lib/systemd/deb-systemd-helper-enabled/keepalived.service.dsh-also

/usr/share/doc/keepalived

/usr/share/doc/keepalived/keepalived.conf.SYNOPSIS

/usr/share/doc/keepalived/samples/keepalived.conf.vrrp.localcheck

/usr/share/doc/keepalived/samples/keepalived.conf.IPv6

/usr/share/doc/keepalived/samples/keepalived.conf.PING_CHECK

/usr/share/doc/keepalived/samples/keepalived.conf.vrrp.lvs_syncd

/usr/share/doc/keepalived/samples/keepalived.conf.track_interface

/usr/share/doc/keepalived/samples/keepalived.conf.vrrp.static_ipaddress

/usr/share/doc/keepalived/samples/keepalived.conf.conditional_conf

/usr/share/doc/keepalived/samples/keepalived.conf.inhibit

/usr/share/doc/keepalived/samples/keepalived.conf.vrrp.sync

/usr/share/doc/keepalived/samples/keepalived.conf.SMTP_CHECK

/usr/share/doc/keepalived/samples/keepalived.conf.vrrp.routes

/usr/share/doc/keepalived/samples/keepalived.conf.HTTP_GET.port

/usr/share/doc/keepalived/samples/keepalived.conf.sample

/usr/share/doc/keepalived/samples/keepalived.conf.status_code

/usr/share/doc/keepalived/samples/keepalived.conf.misc_check

/usr/share/doc/keepalived/samples/keepalived.conf.fwmark

/usr/share/doc/keepalived/samples/keepalived.conf.UDP_CHECK

/usr/share/doc/keepalived/samples/keepalived.conf.misc_check_arg

/usr/share/doc/keepalived/samples/keepalived.conf.vrrp.scripts

/usr/share/doc/keepalived/samples/keepalived.conf.vrrp #VRRP template file

/usr/share/doc/keepalived/samples/keepalived.conf.virtualhost

/usr/share/doc/keepalived/samples/keepalived.conf.SSL_GET

/usr/share/doc/keepalived/samples/keepalived.conf.vrrp.rules

/usr/share/doc/keepalived/samples/keepalived.conf.quorum

/usr/share/doc/keepalived/samples/keepalived.conf.virtual_server_group

/usr/share/man/man5/keepalived.conf.5.gz

/usr/share/man/man8/keepalived.8.gz

/usr/sbin/keepalived

/usr/lib/systemd/system/keepalived.service

/usr/lib/python3/dist-packages/sos/report/plugins/keepalived.py

/usr/lib/python3/dist-packages/sos/report/plugins/__pycache__/keepalived.cpython-310.pyc

Copy the VRRP template file into the keepalived configuration directory:

cp /usr/share/doc/keepalived/samples/keepalived.conf.vrrp /etc/keepalived/keepalived.conf

Edit the keepalived configuration file on HA1:

cat <<EOF > /etc/keepalived/keepalived.conf
! Configuration File for keepalived

global_defs {
    notification_email {
        acassen
    }
    notification_email_from Alexandre.Cassen@firewall.loc
    smtp_server 192.168.200.1
    smtp_connect_timeout 30
    router_id LVS_DEVEL
}

vrrp_instance VI_1 {          #name of this VRRP instance: "VI_1"
    state MASTER              #initial state of this instance is MASTER
    interface ens160          #bind this instance to the NIC named "ens160"
    garp_master_delay 10      #delay, in seconds, before sending gratuitous ARP after becoming master
    smtp_alert                #send email notifications on state transitions (MASTER, BACKUP, FAULT)
    virtual_router_id 51      #virtual router ID of this VRRP instance; valid range is 1-255
    priority 100              #priority of this instance; if a backup node's priority exceeds the master's, the backup becomes the new master
    advert_int 1              #interval between VRRP advertisements, in seconds
    authentication {          #authentication options for VRRP messages
        auth_type PASS        #VRRP traffic uses password authentication
        auth_pass 1111        #password for this VRRP instance
    }
    virtual_ipaddress {       #set of virtual IP addresses for this VRRP instance
        192.168.60.188 dev ens160 label ens160:0
        192.168.60.189 dev ens160 label ens160:1
        192.168.60.190 dev ens160 label ens160:2
        192.168.60.191 dev ens160 label ens160:3
    }
}
EOF

Edit the keepalived configuration file on HA2:

cat <<EOF > /etc/keepalived/keepalived.conf
! Configuration File for keepalived

global_defs {
    notification_email {
        acassen
    }
    notification_email_from Alexandre.Cassen@firewall.loc
    smtp_server 192.168.200.1
    smtp_connect_timeout 30
    router_id LVS_DEVEL
}

vrrp_instance VI_1 {
    state BACKUP              #initial state is BACKUP
    interface ens160
    garp_master_delay 10
    smtp_alert
    virtual_router_id 51
    priority 80               #priority must be lower than the MASTER's
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.60.188 dev ens160 label ens160:0
        192.168.60.189 dev ens160 label ens160:1
        192.168.60.190 dev ens160 label ens160:2
        192.168.60.191 dev ens160 label ens160:3
    }
}
EOF

Restart the service so the configuration takes effect:

systemctl restart keepalived && systemctl enable keepalived

Verify that keepalived works:

Normal state:

root@ha-1:~#ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:50:56:a5:b2:2a brd ff:ff:ff:ff:ff:ff

altname enp3s0

inet 192.168.60.169/24 brd 192.168.60.255 scope global ens160

valid_lft forever preferred_lft forever

inet 192.168.60.188/32 scope global ens160:0

valid_lft forever preferred_lft forever

inet 192.168.60.189/32 scope global ens160:1

valid_lft forever preferred_lft forever

inet 192.168.60.190/32 scope global ens160:2

valid_lft forever preferred_lft forever

inet 192.168.60.191/32 scope global ens160:3

valid_lft forever preferred_lft forever

inet6 fda9:8b2a:a222:0:250:56ff:fea5:b22a/64 scope global mngtmpaddr noprefixroute

valid_lft forever preferred_lft forever

inet6 fe80::250:56ff:fea5:b22a/64 scope link

valid_lft forever preferred_lft forever

root@ha-2:~#ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:50:56:a5:7b:bc brd ff:ff:ff:ff:ff:ff

altname enp3s0

inet 192.168.60.170/24 brd 192.168.60.255 scope global ens160

valid_lft forever preferred_lft forever

inet6 fda9:8b2a:a222:0:250:56ff:fea5:7bbc/64 scope global mngtmpaddr noprefixroute

valid_lft forever preferred_lft forever

inet6 fe80::250:56ff:fea5:7bbc/64 scope link

valid_lft forever preferred_lft forever

Verification: stop the keepalived service on the master node, then check whether the VIPs move to the BACKUP node.
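The failover check described above can also be scripted rather than read off the `ip addr` output by eye. The following is a minimal sketch, not part of the original procedure: `has_vip` is a hypothetical helper name, and it assumes the one-line-per-address format printed by `ip -o -4 addr show`.

```shell
#!/bin/sh
# has_vip VIP: succeed when VIP appears as an IPv4 address in
# `ip -o -4 addr show` style text read from stdin.
has_vip() {
    # Match "inet <VIP>/" literally, so 192.168.60.18 does not match 192.168.60.188.
    grep -qF "inet $1/"
}

# Typical use on either HA node (shown as comments, not executed here):
#   systemctl stop keepalived        # on ha-1, to trigger the failover
#   ip -o -4 addr show dev ens160 | has_vip 192.168.60.188 && echo "VIP is held by this node"
```

Running the second command on ha-2 after stopping keepalived on ha-1 should report that the VIP is held locally; running it on ha-1 should print nothing.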

State after keepalived is stopped on HA-1 (the VIPs have moved to HA-2):

HA-1:

root@ha-1:~#ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:50:56:a5:b2:2a brd ff:ff:ff:ff:ff:ff

altname enp3s0

inet 192.168.60.169/24 brd 192.168.60.255 scope global ens160

valid_lft forever preferred_lft forever

inet6 fda9:8b2a:a222:0:250:56ff:fea5:b22a/64 scope global mngtmpaddr noprefixroute

valid_lft forever preferred_lft forever

inet6 fe80::250:56ff:fea5:b22a/64 scope link

valid_lft forever preferred_lft forever

HA-2:

root@ha-2:~#ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:50:56:a5:7b:bc brd ff:ff:ff:ff:ff:ff

altname enp3s0

inet 192.168.60.170/24 brd 192.168.60.255 scope global ens160

valid_lft forever preferred_lft forever

inet 192.168.60.188/32 scope global ens160:0

valid_lft forever preferred_lft forever

inet 192.168.60.189/32 scope global ens160:1

valid_lft forever preferred_lft forever

inet 192.168.60.190/32 scope global ens160:2

valid_lft forever preferred_lft forever

inet 192.168.60.191/32 scope global ens160:3

valid_lft forever preferred_lft forever

inet6 fda9:8b2a:a222:0:250:56ff:fea5:7bbc/64 scope global mngtmpaddr noprefixroute

valid_lft forever preferred_lft forever

inet6 fe80::250:56ff:fea5:7bbc/64 scope link

valid_lft forever preferred_lft forever

Configure haproxy:

Add the following configuration to /etc/haproxy/haproxy.cfg:

HA-1:

listen k8s-master-6443
  bind 192.168.60.188:6443
  mode tcp
  server 192.168.60.160 192.168.60.160:6443 check inter 2000 fall 3 rise 5
  server 192.168.60.161 192.168.60.161:6443 check inter 2000 fall 3 rise 5
  server 192.168.60.162 192.168.60.162:6443 check inter 2000 fall 3 rise 5
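The `server` lines differ only in the master's IP address, so they can be generated instead of typed by hand. A small sketch, assuming the same port and health-check options as in the configuration above; `gen_backend_servers` is a hypothetical helper, not a haproxy tool.

```shell
#!/bin/sh
# gen_backend_servers PORT IP...: print one haproxy "server" line per IP,
# with the health-check options used in this article (check inter 2000 fall 3 rise 5).
gen_backend_servers() {
    port="$1"
    shift
    for ip in "$@"; do
        printf '  server %s %s:%s check inter 2000 fall 3 rise 5\n' "$ip" "$ip" "$port"
    done
}

# Example (not executed here):
#   gen_backend_servers 6443 192.168.60.160 192.168.60.161 192.168.60.162
```

The output can be pasted under the `listen k8s-master-6443` section on both HA machines, which keeps the two configurations identical.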

HA-2:

listen k8s-master-6443
  bind 192.168.60.188:6443
  mode tcp
  server 192.168.60.160 192.168.60.160:6443 check inter 2000 fall 3 rise 5
  server 192.168.60.161 192.168.60.161:6443 check inter 2000 fall 3 rise 5
  server 192.168.60.162 192.168.60.162:6443 check inter 2000 fall 3 rise 5

Starting the service on the second HA machine reports the following error:

root@HA2:~# haproxy -f /etc/haproxy/haproxy.cfg

[WARNING] (2689) : parsing [/etc/haproxy/haproxy.cfg:23] : 'option httplog' not usable with proxy 'k8s-master-6443' (needs 'mode http'). Falling back to 'option tcplog'.

[NOTICE] (2689) : haproxy version is 2.4.22-0ubuntu0.22.04.1

[NOTICE] (2689) : path to executable is /usr/sbin/haproxy

[ALERT] (2689) : Starting proxy k8s-master-6443: cannot bind socket (Cannot assign requested address) [192.168.60.188:6443]

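[ALERT] (2689) : [haproxy.main()] Some protocols failed to start their listeners! Exiting.

Cause: by default the Linux kernel only lets a process bind to IP addresses that are configured on the local machine. The VIP 192.168.60.188 is not present on this machine (it is held by the current keepalived master), so haproxy cannot bind to it and exits with "Some protocols failed to start their listeners! Exiting."

Solution: allow binding to non-local addresses by setting net.ipv4.ip_nonlocal_bind to 1:

echo "net.ipv4.ip_nonlocal_bind = 1" >> /etc/sysctl.conf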

sysctl -p
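Binding to an address the host does not currently hold requires `net.ipv4.ip_nonlocal_bind` to be `1`. Appending to the sysctl file with `echo >>` adds a duplicate line every time it is run; the sketch below is one idempotent alternative. `set_sysctl` is a hypothetical helper, and it assumes GNU `sed` (as shipped with Ubuntu).

```shell
#!/bin/sh
# set_sysctl FILE KEY VALUE: replace any existing assignment of KEY in FILE
# with "KEY = VALUE", or append that line when KEY is not set yet.
set_sysctl() {
    file="$1"
    key="$2"
    value="$3"
    # Escape dots so the key is matched literally in the regular expressions below.
    pattern=$(printf '%s' "$key" | sed 's/\./\\./g')
    touch "$file"
    if grep -Eq "^[[:space:]]*${pattern}[[:space:]]*=" "$file"; then
        sed -i -E "s|^[[:space:]]*${pattern}[[:space:]]*=.*|${key} = ${value}|" "$file"
    else
        printf '%s = %s\n' "$key" "$value" >> "$file"
    fi
}

# Example (not executed here):
#   set_sysctl /etc/sysctl.conf net.ipv4.ip_nonlocal_bind 1 && sysctl -p
```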

systemctl restart haproxy

Restart the service so the configuration takes effect, and enable it at boot:

systemctl restart haproxy && systemctl enable haproxy

2. Deploying highly available Kubernetes with kubeasz:

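kubeasz aims to provide a tool for quickly deploying highly available k8s clusters, and also strives to be a reference for k8s practice and usage. It deploys from binaries and automates the work with ansible-playbook; it offers both a one-click install script and step-by-step installation of each component following the installation guide.

kubeasz assembles a complete cluster from individual components and offers very flexible configuration (almost any parameter of any component can be set). It also ships a well-tested set of defaults for cluster creation, and can even automate setting up the BGP Route Reflector network mode suited to large clusters.

Official repository:

https://github.com/easzlab/kubeasz

Passwordless (key-based) SSH login setup: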

apt install ansible python3 sshpass

ssh-keygen -t rsa-sha2-512 -b 4096

cat key-copy.sh

#!/bin/bash
# Target hosts, one IP per line
IP="
192.168.60.160
192.168.60.161
192.168.60.162
192.168.60.166
192.168.60.167
192.168.60.168
192.168.60.170
192.168.60.171
192.168.60.176
192.168.60.173
"
REMOTE_PORT="22"
REMOTE_USER="root"
REMOTE_PASS="your-password"
for REMOTE_HOST in ${IP}; do
  REMOTE_CMD="echo ${REMOTE_HOST} is successfully!"
  # Add the remote host's public key to known_hosts
  ssh-keyscan -p "${REMOTE_PORT}" "${REMOTE_HOST}" >> ~/.ssh/known_hosts
  # Set up key-based login through sshpass, then create the python3 symlink
  sshpass -p "${REMOTE_PASS}" ssh-copy-id "${REMOTE_USER}@${REMOTE_HOST}"
  ssh "${REMOTE_HOST}" ln -sv /usr/bin/python3 /usr/bin/python
  echo "${REMOTE_HOST}: key-based login configured!"
done

bash key-copy.sh

Download the kubeasz project and components:

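#The releases page offers several versions of ezdown; each supports different k8s versions, see the official repository for details

wget https://github.com/easzlab/kubeasz/releases/download/3.6.0/ezdown

chmod +x ./ezdown

./ezdown -D

Path of the downloaded binaries:

ll /etc/kubeasz/bin/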

drwxr-xr-x 4 root root 4096 Jun 29 08:05 ./

drwxrwxr-x 12 root root 4096 Jun 29 08:06 ../

-rwxr-xr-x 1 root root 59499254 Nov 8 2022 calicoctl*

-rwxr-xr-x 1 root root 14526840 Apr 1 23:05 cfssl*

-rwxr-xr-x 1 root root 11965624 Apr 1 23:05 cfssl-certinfo*

-rwxr-xr-x 1 root root 7860224 Apr 1 23:05 cfssljson*

-rwxr-xr-x 1 root root 1120896 Jan 10 09:48 chronyd*

-rwxr-xr-x 1 root root 75132928 Mar 21 07:30 cilium*

drwxr-xr-x 2 root root 4096 Apr 1 23:05 cni-bin/

-rwxr-xr-x 1 root root 39451064 Jun 29 08:01 containerd*

drwxr-xr-x 2 root root 4096 Apr 1 23:05 containerd-bin/

-rwxr-xr-x 1 root root 7548928 Jun 29 08:01 containerd-shim*

-rwxr-xr-x 1 root root 9760768 Jun 29 08:01 containerd-shim-runc-v2*

-rwxr-xr-x 1 root root 53698737 Mar 15 13:57 crictl*

-rwxr-xr-x 1 root root 21090304 Jun 29 08:01 ctr*

-rwxr-xr-x 1 root root 47955672 Jun 29 08:01 docker*

-rwxr-xr-x 1 root root 54453847 Mar 26 14:37 docker-compose*

-rwxr-xr-x 1 root root 58246432 Jun 29 08:01 dockerd*

-rwxr-xr-x 1 root root 765808 Jun 29 08:01 docker-init*

-rwxr-xr-x 1 root root 2628514 Jun 29 08:01 docker-proxy*

-rwxr-xr-x 1 root root 23691264 Nov 21 2022 etcd*

-rwxr-xr-x 1 root root 17891328 Nov 21 2022 etcdctl*

-rwxr-xr-x 1 root root 46874624 Mar 8 21:17 helm*

-rwxr-xr-x 1 root root 21848064 Mar 15 12:03 hubble*

-rwxr-xr-x 1 root root 1815560 Jan 10 09:49 keepalived*

-rwxr-xr-x 1 root root 116572160 Apr 14 13:32 kube-apiserver*

-rwxr-xr-x 1 root root 108433408 Apr 14 13:32 kube-controller-manager*

-rwxr-xr-x 1 root root 49246208 Apr 14 13:32 kubectl*

-rwxr-xr-x 1 root root 106151936 Apr 14 13:32 kubelet*

-rwxr-xr-x 1 root root 53063680 Apr 14 13:32 kube-proxy*

-rwxr-xr-x 1 root root 54312960 Apr 14 13:32 kube-scheduler*

-rwxr-xr-x 1 root root 1806304 Jan 10 09:48 nginx*

-rwxr-xr-x 1 root root 14214624 Jun 29 08:01 runc*

Create the cluster directory, hosts file, and configuration file:

cd /etc/kubeasz/

root@ha-2:/etc/kubeasz# ./ezctl new k8s-cluster1 #k8s-cluster1 is the cluster name

2023-06-29 08:31:18 DEBUG generate custom cluster files in /etc/kubeasz/clusters/k8s-cluster1 #cluster directory

2023-06-29 08:31:18 DEBUG set versions

2023-06-29 08:31:18 DEBUG cluster k8s-cluster1: files successfully created.

2023-06-29 08:31:18 INFO next steps 1: to config '/etc/kubeasz/clusters/k8s-cluster1/hosts' #path of the hosts file

2023-06-29 08:31:18 INFO next steps 2: to config '/etc/kubeasz/clusters/k8s-cluster1/config.yml' #path of the cluster configuration file

Customize the hosts file:

vim /etc/kubeasz/clusters/k8s-cluster1/hosts

# 'etcd' cluster should have odd member(s) (1,3,5,...)
[etcd]
192.168.60.171
192.168.60.176
192.168.60.173

# master node(s), set unique 'k8s_nodename' for each node
# CAUTION: 'k8s_nodename' must consist of lower case alphanumeric characters, '-' or '.',
# and must start and end with an alphanumeric character
[kube_master]
192.168.60.160 k8s_nodename='192.168.60.160'
192.168.60.161 k8s_nodename='192.168.60.161'

# work node(s), set unique 'k8s_nodename' for each node
# CAUTION: 'k8s_nodename' must consist of lower case alphanumeric characters, '-' or '.',
# and must start and end with an alphanumeric character
[kube_node]
192.168.60.166 k8s_nodename='192.168.60.166'
192.168.60.167 k8s_nodename='192.168.60.167'

# [optional] harbor server, a private docker registry
# 'NEW_INSTALL': 'true' to install a harbor server; 'false' to integrate with existed one
[harbor]
#192.168.60.8 NEW_INSTALL=false

# [optional] loadbalance for accessing k8s from outside
[ex_lb]
#192.168.60.6 LB_ROLE=backup EX_APISERVER_VIP=192.168.60.250 EX_APISERVER_PORT=8443
#192.168.60.7 LB_ROLE=master EX_APISERVER_VIP=192.168.60.250 EX_APISERVER_PORT=8443

# [optional] ntp server for the cluster
[chrony]
#192.168.60.1

[all:vars]
# --------- Main Variables ---------------
# Secure port for apiservers
SECURE_PORT="6443"

# Cluster container-runtime supported: docker, containerd
# if k8s version >= 1.24, docker is not supported
CONTAINER_RUNTIME="containerd"

# Network plugins supported: calico, flannel, kube-router, cilium, kube-ovn
# (the default is calico)
CLUSTER_NETWORK="calico"

# Service proxy mode of kube-proxy: 'iptables' or 'ipvs'
# (the default is ipvs)
PROXY_MODE="ipvs"

# K8S Service CIDR, not overlap with node(host) networking
# (the Service network)
SERVICE_CIDR="10.100.0.0/16"

# Cluster CIDR (Pod CIDR), not overlap with node(host) networking
# (the Pod network)
CLUSTER_CIDR="10.200.0.0/16"

# NodePort Range
NODE_PORT_RANGE="30000-62767"

# Cluster DNS Domain
# (DNS suffix, customizable; used to resolve internal service names, not exposed externally)
CLUSTER_DNS_DOMAIN="cluster.local"

# -------- Additional Variables (don't change the default value right now) ---
# Binaries Directory
bin_dir="/usr/local/bin"

# Deploy Directory (kubeasz workspace)
base_dir="/etc/kubeasz"

# Directory for a specific cluster
cluster_dir="{{ base_dir }}/clusters/k8s-cluster1"

# CA and other components cert/key Directory
ca_dir="/etc/kubernetes/ssl"

# Default 'k8s_nodename' is empty
k8s_nodename=''
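The CAUTION comments in the hosts file constrain what `k8s_nodename` may contain, and the config.yml comments quote the regular expression used for validation. The sketch below checks candidate names against that expression before they go into the hosts file; `valid_nodename` is a hypothetical helper, not part of kubeasz.

```shell
#!/bin/sh
# valid_nodename NAME: succeed when NAME consists of lower-case alphanumerics,
# '-' or '.', and every dot-separated label starts and ends with an alphanumeric.
valid_nodename() {
    printf '%s\n' "$1" |
        grep -Eq '^[a-z0-9]([-a-z0-9]*[a-z0-9])?(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*$'
}

# Example (not executed here):
#   valid_nodename "k8s-master-1" && echo ok
#   valid_nodename "K8S_Master" || echo "invalid: upper case and '_' are not allowed"
```

An IP address such as 192.168.60.160 passes this check, which is why the hosts file above can use the node IPs as names.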

vim /etc/kubeasz/clusters/k8s-cluster1/config.yml

############################
# prepare
############################
# Optional: install system packages offline (offline|online)
INSTALL_SOURCE: "online"

# Optional: apply OS security hardening, see github.com/dev-sec/ansible-collection-hardening
OS_HARDEN: false

############################
# role:deploy
############################
# default: the CA certificate is valid for 100 years
CA_EXPIRY: "876000h"
# certificates issued by the CA are valid for 50 years
CERT_EXPIRY: "438000h"

# Force re-creation of the CA and other certificates; setting this to "true" is not recommended
CHANGE_CA: false

# kubeconfig parameters
CLUSTER_NAME: "cluster1"
CONTEXT_NAME: "context-{{ CLUSTER_NAME }}"

# k8s version
K8S_VER: "1.27.1"

# set unique 'k8s_nodename' for each node, if not set(default:'') ip add will be used
# CAUTION: 'k8s_nodename' must consist of lower case alphanumeric characters, '-' or '.',
# and must start and end with an alphanumeric character (e.g. 'example.com'),
# regex used for validation is '[a-z0-9]([-a-z0-9]*[a-z0-9])?(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*'
K8S_NODENAME: "{%- if k8s_nodename != '' -%} \
                    {{ k8s_nodename|replace('_', '-')|lower }} \
               {%- else -%} \
                    {{ inventory_hostname }} \
               {%- endif -%}"

############################
# role:etcd
############################
# Using a separate WAL directory avoids disk I/O contention and improves performance
ETCD_DATA_DIR: "/var/lib/etcd"
ETCD_WAL_DIR: ""

############################
# role:runtime [containerd,docker]
############################
# ------------------------------------------- containerd
# [.] enable container registry mirrors
ENABLE_MIRROR_REGISTRY: true

# [containerd] base (sandbox) container image
SANDBOX_IMAGE: "registry.cn-shanghai.aliyuncs.com/kubernetes_images_qwx/alpine:latest"

# [containerd] persistent storage directory for containers
CONTAINERD_STORAGE_DIR: "/var/lib/containerd"

# ------------------------------------------- docker
# [docker] container storage directory
DOCKER_STORAGE_DIR: "/var/lib/docker"

# [docker] enable the RESTful API
ENABLE_REMOTE_API: false

# [docker] trusted HTTP registries
INSECURE_REG:
  - "http://easzlab.io.local:5000"
  - "https://{{ HARBOR_REGISTRY }}"

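############################
# role:kube-master
############################
# Certificate hosts for the k8s master nodes; several IPs and domain names can be added
# (for example a public IP and domain). Include the load balancer IP (the VIP) here.
MASTER_CERT_HOSTS:
  - "192.168.60.188"
  - "k8s.easzlab.io"
  #- "www.test.com"

# Prefix length of the pod subnet on each node (determines how many pod IPs each node can allocate)
# If flannel runs with --kube-subnet-mgr, it reads this setting to assign a pod subnet to each node
# https://github.com/coreos/flannel/issues/847
NODE_CIDR_LEN: 24

############################
# role:kube-node
############################
# Kubelet root directory
KUBELET_ROOT_DIR: "/var/lib/kubelet"

# Maximum number of pods per node
MAX_PODS: 200

# Resources reserved for kube components (kubelet, kube-proxy, dockerd, etc.)
# See templates/kubelet-config.yaml.j2 for the values
KUBE_RESERVED_ENABLED: "no"

# Upstream k8s advises against enabling system-reserved casually, unless long-term monitoring
# has shown how much the system actually uses; the reservation should also grow with uptime.
# See templates/kubelet-config.yaml.j2 for the values.
# The system reservation assumes a 4c/8g VM with a minimal set of system services;
# on high-performance physical machines the reservation can be increased.
# In addition, apiserver and other components briefly use more resources during installation,
# so reserving at least 1g of memory is recommended.
SYS_RESERVED_ENABLED: "no"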

############################
# role:network [flannel,calico,cilium,kube-ovn,kube-router]
############################
# ------------------------------------------- flannel
# [flannel] flannel backend: "host-gw", "vxlan", etc.
FLANNEL_BACKEND: "vxlan"
DIRECT_ROUTING: false

# [flannel]
flannel_ver: "v0.21.4"

# ------------------------------------------- calico
# [calico] IPIP tunnel mode, one of [Always, CrossSubnet, Never]. Across subnets use Always or
# CrossSubnet (on public clouds Always is the simplest choice; otherwise the cloud's own network
# configuration has to be changed, see each provider's documentation).
# CrossSubnet is a hybrid of tunnelling and BGP routing that can improve network performance;
# within a single subnet, Never is sufficient.
CALICO_IPV4POOL_IPIP: "Always"

# [calico] host IP used by calico-node; BGP peers connect through this address.
# It can be set manually or discovered automatically.
IP_AUTODETECTION_METHOD: "can-reach={{ groups['kube_master'][0] }}"

# [calico] calico network backend: brid, vxlan, none
CALICO_NETWORKING_BACKEND: "brid"

# [calico] whether calico uses route reflectors
# Recommended when the cluster has more than 50 nodes
CALICO_RR_ENABLED: false

# CALICO_RR_NODES lists the route reflector nodes; if unset, the cluster master nodes are used
# CALICO_RR_NODES: ["192.168.1.1", "192.168.1.2"]
CALICO_RR_NODES: []

# [calico] supported calico versions: ["3.19", "3.23"]
calico_ver: "v3.24.5"

# [calico] calico major version
calico_ver_main: "{{ calico_ver.split('.')[0] }}.{{ calico_ver.split('.')[1] }}"

# ------------------------------------------- cilium
# [cilium] image version
cilium_ver: "1.13.2"
cilium_connectivity_check: true
cilium_hubble_enabled: false
cilium_hubble_ui_enabled: false

# ------------------------------------------- kube-ovn
# [kube-ovn] node for the OVN DB and OVN Control Plane; defaults to the first master node
OVN_DB_NODE: "{{ groups['kube_master'][0] }}"

# [kube-ovn] offline image tar package
kube_ovn_ver: "v1.5.3"

# ------------------------------------------- kube-router
# [kube-router] public clouds impose restrictions and usually need ipinip always on;
# in your own environment this can be set to "subnet"
OVERLAY_TYPE: "full"

# [kube-router] NetworkPolicy support switch
FIREWALL_ENABLE: true

# [kube-router] kube-router image version
kube_router_ver: "v0.3.1"
busybox_ver: "1.28.4"

############################
# role:cluster-addon
############################
# install coredns automatically
dns_install: "yes"
corednsVer: "1.9.3"
ENABLE_LOCAL_DNS_CACHE: true
dnsNodeCacheVer: "1.22.20"
# address of the local dns cache
LOCAL_DNS_CACHE: "169.254.20.10"

# install metrics server automatically
metricsserver_install: "no"
metricsVer: "v0.6.3"

# install dashboard automatically
dashboard_install: "no"
dashboardVer: "v2.7.0"
dashboardMetricsScraperVer: "v1.0.8"

# install prometheus automatically
prom_install: "no"
prom_namespace: "monitor"
prom_chart_ver: "45.23.0"

# install nfs-provisioner automatically
nfs_provisioner_install: "no"
nfs_provisioner_namespace: "kube-system"
nfs_provisioner_ver: "v4.0.2"
nfs_storage_class: "managed-nfs-storage"
nfs_server: "192.168.1.10"
nfs_path: "/data/nfs"

# install network-check automatically
network_check_enabled: false
network_check_schedule: "*/5 * * * *"

############################
# role:harbor
############################
# harbor version, full version number
HARBOR_VER: "v2.6.4"
HARBOR_DOMAIN: "harbor.easzlab.io.local"
HARBOR_PATH: /var/data
HARBOR_TLS_PORT: 8443
HARBOR_REGISTRY: "{{ HARBOR_DOMAIN }}:{{ HARBOR_TLS_PORT }}"

# if set 'false', you need to put certs named harbor.pem and harbor-key.pem in directory 'down'
HARBOR_SELF_SIGNED_CERT: true

# install extra component
HARBOR_WITH_NOTARY: false #do not install the Notary component
HARBOR_WITH_TRIVY: false
HARBOR_WITH_CHARTMUSEUM: true

Basic system initialization of the hosts:

root@ha-2:/etc/kubeasz# ./ezctl setup k8s-cluster1 01

01 is the step number used in the official installation guide; the available steps can also be listed with --help:

root@ha-2:/etc/kubeasz# ./ezctl setup k8s-cluster1 --help

Usage: ezctl setup <cluster> <step>

available steps:

01 prepare to prepare CA/certs & kubeconfig & other system settings

02 etcd to setup the etcd cluster

03 container-runtime to setup the container runtime(docker or containerd)

04 kube-master to setup the master nodes

05 kube-node to setup the worker nodes

06 network to setup the network plugin

07 cluster-addon to setup other useful plugins

90 all to run 01~07 all at once

10 ex-lb to install external loadbalance for accessing k8s from outside

11 harbor to install a new harbor server or to integrate with an existed one

examples: ./ezctl setup test-k8s 01 (or ./ezctl setup test-k8s prepare)

./ezctl setup test-k8s 02 (or ./ezctl setup test-k8s etcd)

./ezctl setup test-k8s all

./ezctl setup test-k8s 04 -t restart_master

Deploy the etcd cluster:

root@ha-2:/etc/kubeasz# ./ezctl setup k8s-cluster1 02

Verify the etcd cluster (run on any etcd node):

export NODE_IPS="192.168.60.171 192.168.60.173 192.168.60.176"

for ip in ${NODE_IPS}; do ETCDCTL_API=3 /usr/local/bin/etcdctl --endpoints=https://${ip}:2379 --cacert=/etc/kubernetes/ssl/ca.pem --cert=/etc/kubernetes/ssl/etcd.pem --key=/etc/kubernetes/ssl/etcd-key.pem endpoint health; done

Expected result:

https://192.168.60.171:2379 is healthy: successfully committed proposal: took = 21.848384ms

https://192.168.60.173:2379 is healthy: successfully committed proposal: took = 22.883956ms

https://192.168.60.176:2379 is healthy: successfully committed proposal: took = 20.277471ms

Deploy the container runtime containerd:

root@ha-2:/etc/kubeasz# ./ezctl setup k8s-cluster1 03

Verify that containerd was installed successfully:

root@k8s-master-1:~# /opt/kube/bin/containerd -v

root@k8s-master-1:~# /opt/kube/bin/crictl -v

Deploy the Kubernetes master nodes:

root@ha-2:/etc/kubeasz# vim roles/kube-master/tasks/main.yml #optional: customize the tasks

root@ha-2:/etc/kubeasz# ./ezctl setup k8s-cluster1 04

Verify that the master nodes were deployed successfully:

root@ha-2:/etc/kubeasz#kubectl get nodes

NAME STATUS ROLES AGE VERSION

192.168.60.160 Ready,SchedulingDisabled master 76s v1.26.4

192.168.60.161 Ready,SchedulingDisabled master 76s v1.26.4

Deploy the Kubernetes worker nodes:

root@ha-2:/etc/kubeasz# vim roles/kube-node/tasks/main.yml #optional: customize the tasks

root@ha-2:/etc/kubeasz# ./ezctl setup k8s-cluster1 05

Verify that the worker nodes were deployed successfully:

root@ha-2:/etc/kubeasz# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.60.160 Ready,SchedulingDisabled master 8m37s v1.26.4

192.168.60.161 Ready,SchedulingDisabled master 8m37s v1.26.4

192.168.60.166 Ready node 11s v1.26.4

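192.168.60.167 Ready node 11s v1.26.4

Deploy the calico network service:

Before deploying calico, the calico image addresses can be changed to an internal registry or a domestic mirror registry so that the images pull quickly: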

root@ha-2:/etc/kubeasz# grep "image:" roles/calico/templates/calico-v3.24.yaml.j2

image: easzlab.io.local:5000/calico/cni:{{ calico_ver }}

image: easzlab.io.local:5000/calico/node:{{ calico_ver }}

image: easzlab.io.local:5000/calico/node:{{ calico_ver }}

image: easzlab.io.local:5000/calico/kube-controllers:{{ calico_ver }}

Change the image addresses above to those of an internal registry or a domestic mirror registry.
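Rewriting the registry prefix by hand is error-prone when a template has several `image:` lines. The sketch below does it with `sed`; `rewrite_image_registry` is a hypothetical helper and `registry.example.internal` is a placeholder registry name, neither taken from kubeasz. It assumes GNU `sed` and that the new registry name contains no `|` or `&` characters.

```shell
#!/bin/sh
# rewrite_image_registry FILE OLD NEW: on every "image: OLD/..." line of FILE,
# replace the registry prefix OLD with NEW, editing the file in place.
rewrite_image_registry() {
    file="$1"
    old="$2"
    new="$3"
    # Escape dots so OLD is matched literally rather than as a regex wildcard.
    old_re=$(printf '%s' "$old" | sed 's/\./\\./g')
    sed -i "s|image: ${old_re}/|image: ${new}/|" "$file"
}

# Example (not executed here; back up the template first):
#   cp roles/calico/templates/calico-v3.24.yaml.j2 /tmp/calico-v3.24.yaml.j2.bak
#   rewrite_image_registry roles/calico/templates/calico-v3.24.yaml.j2 \
#       easzlab.io.local:5000 registry.example.internal
```

Re-running the `grep "image:"` command afterwards shows whether every line now points at the new registry.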

root@ha-2:/etc/kubeasz# ./ezctl setup k8s-cluster1 06

Verify that calico was deployed successfully:

Run on a master node:

[root@k8s-master1 ~]# /opt/kube/bin/calicoctl node status

Calico process is running.

IPv4 BGP status

+----------------+-------------------+-------+----------+-------------+

| PEER ADDRESS | PEER TYPE | STATE | SINCE | INFO |

+----------------+-------------------+-------+----------+-------------+

| 192.168.60.161 | node-to-node mesh | up | 16:00:29 | Established |

| 192.168.60.166 | node-to-node mesh | up | 16:00:40 | Established |

| 192.168.60.167 | node-to-node mesh | up | 16:00:29 | Established |

+----------------+-------------------+-------+----------+-------------+

IPv6 BGP status

No IPv6 peers found.

Run on a worker node:

[root@k8s-node1 ~]# /opt/kube/bin/calicoctl node status

Calico process is running.

IPv4 BGP status

+----------------+-------------------+-------+----------+-------------+

| PEER ADDRESS | PEER TYPE | STATE | SINCE | INFO |

+----------------+-------------------+-------+----------+-------------+

| 192.168.60.160 | node-to-node mesh | up | 16:00:29 | Established |

| 192.168.60.161 | node-to-node mesh | up | 16:00:29 | Established |

| 192.168.60.167 | node-to-node mesh | up | 16:00:29 | Established |

+----------------+-------------------+-------+----------+-------------+

IPv6 BGP status

No IPv6 peers found.

Verify Pod communication:

root@ha-2:~# kubectl run net-test1 --image=alpine sleep 360000

pod/net-test1 created

root@ha-2:~# kubectl run net-test2 --image=alpine sleep 360000

pod/net-test2 created

root@ha-2:~# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

net-test1 1/1 Running 0 36s 10.200.169.129 192.168.60.167 <none> <none>

net-test2 1/1 Running 0 23s 10.200.107.193 192.168.60.168 <none> <none>

Ping net-test2 from net-test1:

root@ha-2:~# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

net-test1 1/1 Running 0 4m14s 10.200.169.129 192.168.60.167 <none> <none>

net-test2 1/1 Running 0 4m1s 10.200.107.193 192.168.60.168 <none> <none>

root@ha-2:~# kubectl exec -it net-test1 sh

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

/ # ping 10.200.107.193

PING 10.200.107.193 (10.200.107.193): 56 data bytes

64 bytes from 10.200.107.193: seq=0 ttl=62 time=0.605 ms

^C

--- 10.200.107.193 ping statistics ---

3 packets transmitted, 3 packets received, 0% packet loss

round-trip min/avg/max = 0.605/0.764/0.939 ms

/ # ping 114.114.114.114

PING 114.114.114.114 (114.114.114.114): 56 data bytes

64 bytes from 114.114.114.114: seq=0 ttl=90 time=16.884 ms

^C

--- 114.114.114.114 ping statistics ---

3 packets transmitted, 3 packets received, 0% packet loss

round-trip min/avg/max = 15.954/16.450/16.884 ms

Because CoreDNS has not been configured yet, pinging a domain name gets no response:

/ # ping baidu.com

^C

Cluster node scaling:

Add a master node:

root@ha-2:/etc/kubeasz# ./ezctl add-master k8s-cluster1 192.168.60.162

Verify that the master was added:

root@ha-2:/etc/kubeasz# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.60.160 Ready,SchedulingDisabled master 22m v1.26.4

192.168.60.161 Ready,SchedulingDisabled master 22m v1.26.4

192.168.60.162 Ready,SchedulingDisabled master 54s v1.26.4

192.168.60.166 Ready node 21m v1.26.4

192.168.60.167 Ready node 21m v1.26.4

Verify the CNI:

root@k8s-master1:~# /opt/kube/bin/calicoctl node status

Calico process is running.

IPv4 BGP status

+----------------+-------------------+-------+----------+-------------+

| PEER ADDRESS | PEER TYPE | STATE | SINCE | INFO |

+----------------+-------------------+-------+----------+-------------+

| 192.168.60.161 | node-to-node mesh | up | 16:00:29 | Established |

| 192.168.60.162 | node-to-node mesh | up | 16:00:29 | Established |

| 192.168.60.166 | node-to-node mesh | up | 16:00:40 | Established |

| 192.168.60.167 | node-to-node mesh | up | 16:00:29 | Established |

+----------------+-------------------+-------+----------+-------------+

IPv6 BGP status

No IPv6 peers found.

Add a worker node:

root@ha-2:/etc/kubeasz# ./ezctl add-node k8s-cluster1 192.168.60.168

Verify that the node was added:

root@ha-2:/etc/kubeasz# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.60.160 Ready,SchedulingDisabled master 18m v1.26.4

192.168.60.161 Ready,SchedulingDisabled master 18m v1.26.4

192.168.60.162 Ready,SchedulingDisabled master 54s v1.26.4

192.168.60.166 Ready node 17m v1.26.4

192.168.60.167 Ready node 17m v1.26.4

192.168.60.168 Ready node 70s v1.26.4

Verify the CNI:

root@k8s-master1:~# /opt/kube/bin/calicoctl node status

Calico process is running.

IPv4 BGP status

+----------------+-------------------+-------+----------+-------------+

| PEER ADDRESS | PEER TYPE | STATE | SINCE | INFO |

+----------------+-------------------+-------+----------+-------------+

| 192.168.60.161 | node-to-node mesh | up | 16:00:29 | Established |

| 192.168.60.162 | node-to-node mesh | up | 16:00:29 | Established |

| 192.168.60.166 | node-to-node mesh | up | 16:00:40 | Established |

| 192.168.60.167 | node-to-node mesh | up | 16:00:29 | Established |

| 192.168.60.168 | node-to-node mesh | up | 16:00:51 | Established |

+----------------+-------------------+-------+----------+-------------+

IPv6 BGP status

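No IPv6 peers found.

3. Upgrading the cluster:

There are two ways to upgrade the cluster:

1. Upgrade with kubeasz

2. Upgrade manually

3.1 Upgrading the cluster with kubeasz

3.1.1 Download the binaries

Download binaries of a version newer than the running cluster and place them in /etc/kubeasz/bin. Back up the old binaries before overwriting them. Here ezdown is used to download the binaries: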

wget https://github.com/easzlab/kubeasz/releases/download/3.6.1/ezdown

chmod +x ezdown

./ezdown -D

cd /etc/kubeasz/bin

tar zcvf k8s_v1_27_2.tar.gz kube-apiserver kube-controller-manager kubectl kubelet kube-proxy kube-scheduler

3.1.2 Back up and replace the binaries:

mkdir -p /usr/local/src/{k8s_v1.27.2,k8s_v1.26.4}

cd /usr/local/src/k8s_v1.27.2 && tar zxvf /etc/kubeasz/bin/k8s_v1_27_2.tar.gz

cd /etc/kubeasz/bin && mv kube-apiserver kube-controller-manager kubectl kubelet kube-proxy kube-scheduler /usr/local/src/k8s_v1.26.4

Verify that the downloaded binaries are version v1.27.2:

/usr/local/src/k8s_v1.27.2/kube-apiserver --version

Kubernetes v1.27.2

cp /usr/local/src/k8s_v1.27.2/* /etc/kubeasz/bin

3.1.3 Upgrade the cluster:

./ezctl upgrade k8s-cluster1

3.1.4 Verify that the upgrade succeeded:

root@ha-2:/etc/kubeasz# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.60.160 Ready,SchedulingDisabled master 15d v1.27.2

192.168.60.161 Ready,SchedulingDisabled master 15d v1.27.2

192.168.60.162 Ready,SchedulingDisabled master 15d v1.27.2

192.168.60.166 Ready node 15d v1.27.2

192.168.60.167 Ready node 15d v1.27.2

192.168.60.168 Ready node 15d v1.27.2
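With more than a handful of nodes, scanning the VERSION column by eye becomes unreliable. The sketch below reads `kubectl get node` output and lists any node that is not at the expected version; `nodes_not_at` is a hypothetical helper, and it assumes VERSION is the last column, as in the output above.

```shell
#!/bin/sh
# nodes_not_at VERSION: read `kubectl get node` output on stdin and print the
# name of every node whose last column differs from VERSION.
# Exit status is 0 only when no such node exists.
nodes_not_at() {
    awk -v want="$1" '
        NR > 1 && NF > 0 && $NF != want { print $1; bad = 1 }
        END { exit bad }
    '
}

# Example (not executed here):
#   kubectl get node | nodes_not_at v1.27.2 && echo "all nodes upgraded"
```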

3.2、手动更新:

3.2.1升级master节点:

在所有master上执行:

systemctl stop kube-apiserver kube-controller-manager kubelet kube-proxy kube-scheduler在部署节点执行:

scp /usr/local/src/k8s_v1.27.2/{kube-apiserver,kube-controller-manager,kubelet,kube-proxy,kube-scheduler} 192.168.60.160:/opt/kube/bin/

scp /usr/local/src/k8s_v1.27.2/{kube-apiserver,kube-controller-manager,kubelet,kube-proxy,kube-scheduler} 192.168.60.161:/opt/kube/bin/

scp /usr/local/src/k8s_v1.27.2/{kube-apiserver,kube-controller-manager,kubelet,kube-proxy,kube-scheduler} 192.168.60.162:/opt/kube/bin/

Run on all master nodes:

systemctl start kube-apiserver kube-controller-manager kubelet kube-proxy kube-scheduler
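The stop, copy, and start steps above can also be generated per host. The sketch below only prints the commands and executes nothing; `plan_upgrade` is a hypothetical helper, and it assumes passwordless SSH from the deployment node to each host. Unlike the steps above, it handles one host at a time, which is a variation chosen here so that the other masters keep serving while one is being replaced:

```shell
#!/bin/sh
# plan_upgrade SRC_DIR "BINARIES" HOST...
# Prints, host by host, the stop / copy / start commands used in this
# section. BINARIES is a space-separated list of service names, which
# are also the binary file names under SRC_DIR.
plan_upgrade() {
  src="$1"; bins="$2"; shift 2
  for host in "$@"; do
    files=""
    for b in $bins; do files="$files $src/$b"; done
    # ssh -n keeps ssh from reading the rest of a script fed on stdin.
    echo "ssh -n $host systemctl stop $bins"
    echo "scp$files $host:/opt/kube/bin/"
    echo "ssh -n $host systemctl start $bins"
  done
}

# Usage: review the plan, save it to a file, then run it.
#   plan_upgrade /usr/local/src/k8s_v1.27.2 \
#     "kube-apiserver kube-controller-manager kubelet kube-proxy kube-scheduler" \
#     192.168.60.160 192.168.60.161 192.168.60.162 > upgrade-masters.sh
#   sh -x upgrade-masters.sh
```

The same function covers worker nodes when called with "kubelet kube-proxy" as the binary list.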

3.2.2 Upgrade the worker nodes:

Run on all worker nodes:

systemctl stop kubelet kube-proxy

Run on the deployment node:

scp /usr/local/src/k8s_v1.27.2/{kubelet,kube-proxy} 192.168.60.166:/opt/kube/bin/

scp /usr/local/src/k8s_v1.27.2/{kubelet,kube-proxy} 192.168.60.167:/opt/kube/bin/

scp /usr/local/src/k8s_v1.27.2/{kubelet,kube-proxy} 192.168.60.168:/opt/kube/bin/

Run on all worker nodes:

systemctl start kubelet kube-proxy

Verify the upgrade:

root@ha-2:/etc/kubeasz# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.60.160 Ready,SchedulingDisabled master 15d v1.27.2

192.168.60.161 Ready,SchedulingDisabled master 15d v1.27.2

192.168.60.162 Ready,SchedulingDisabled master 15d v1.27.2

192.168.60.166 Ready node 15d v1.27.2

192.168.60.167 Ready node 15d v1.27.2

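192.168.60.168 Ready node 15d v1.27.2

4. Deploying CoreDNS, the in-cluster DNS service for Kubernetes:

Two DNS add-ons are in common use: kube-dns and CoreDNS. Up to Kubernetes v1.17.x, either one could be used to resolve a Service name in the cluster to its IP address. Starting with Kubernetes v1.18, kube-dns is deprecated and CoreDNS should be used.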
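To make "resolving a Service name" concrete: a Service is reachable under a name of the form name.namespace.svc.zone. A small sketch of that naming scheme follows; `service_fqdn` is a hypothetical helper, and the default zone `cluster.local` is an assumption that matches the Corefile used in this section:

```shell
#!/bin/sh
# service_fqdn NAME NAMESPACE [ZONE]
# Builds the fully qualified DNS name under which the cluster DNS
# publishes a Service. ZONE defaults to cluster.local.
service_fqdn() {
  echo "$1.$2.svc.${3:-cluster.local}"
}

# Usage, from inside a Pod once CoreDNS is running:
#   nslookup "$(service_fqdn kubernetes default)"
```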

Deploy CoreDNS:

4.1 Deployment manifest:

https://github.com/coredns/deployment/tree/master/kubernetes

# __MACHINE_GENERATED_WARNING__

apiVersion: v1

kind: ServiceAccount

metadata:

name: coredns

namespace: kube-system

labels:

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

labels:

kubernetes.io/bootstrapping: rbac-defaults

addonmanager.kubernetes.io/mode: Reconcile

name: system:coredns

rules:

- apiGroups:

- ""

resources:

- endpoints

- services

- pods

- namespaces

verbs:

- list

- watch

- apiGroups:

- ""

resources:

- nodes

verbs:

- get

- apiGroups:

- discovery.k8s.io

resources:

- endpointslices

verbs:

- list

- watch

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

annotations:

rbac.authorization.kubernetes.io/autoupdate: "true"

labels:

kubernetes.io/bootstrapping: rbac-defaults

addonmanager.kubernetes.io/mode: EnsureExists

name: system:coredns

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: system:coredns

subjects:

- kind: ServiceAccount

name: coredns

namespace: kube-system

---

apiVersion: v1

kind: ConfigMap

metadata:

name: coredns

namespace: kube-system

labels:

addonmanager.kubernetes.io/mode: EnsureExists

data:

Corefile: |

.:53 {

errors

health {

lameduck 5s

}

ready

kubernetes cluster.local in-addr.arpa ip6.arpa {

pods insecure

fallthrough in-addr.arpa ip6.arpa

ttl 30

}

prometheus :9153

#forward . /etc/resolv.conf {

forward . 223.6.6.6 {

max_concurrent 1000

}

cache 600

loop

reload

loadbalance

}

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: coredns

namespace: kube-system

labels:

k8s-app: kube-dns

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

kubernetes.io/name: "CoreDNS"

spec:

# replicas: not specified here:

# 1. In order to make Addon Manager do not reconcile this replicas parameter.

# 2. Default is 1.

# 3. Will be tuned in real time if DNS horizontal auto-scaling is turned on.

replicas: 2

strategy:

type: RollingUpdate

rollingUpdate:

maxUnavailable: 1

selector:

matchLabels:

k8s-app: kube-dns

template:

metadata:

labels:

k8s-app: kube-dns

spec:

securityContext:

seccompProfile:

type: RuntimeDefault

priorityClassName: system-cluster-critical

serviceAccountName: coredns

affinity:

podAntiAffinity:

preferredDuringSchedulingIgnoredDuringExecution:

- weight: 100

podAffinityTerm:

labelSelector:

matchExpressions:

- key: k8s-app

operator: In

values: ["kube-dns"]

topologyKey: kubernetes.io/hostname

tolerations:

- key: "CriticalAddonsOnly"

operator: "Exists"

nodeSelector:

kubernetes.io/os: linux

containers:

- name: coredns

image: coredns/coredns:1.9.4

imagePullPolicy: IfNotPresent

resources:

limits:

memory: 256Mi

cpu: 200m

requests:

cpu: 100m

memory: 70Mi

args: [ "-conf", "/etc/coredns/Corefile" ]

volumeMounts:

- name: config-volume

mountPath: /etc/coredns

readOnly: true

ports:

- containerPort: 53

name: dns

protocol: UDP

- containerPort: 53

name: dns-tcp

protocol: TCP

- containerPort: 9153

name: metrics

protocol: TCP

livenessProbe:

httpGet:

path: /health

port: 8080

scheme: HTTP

initialDelaySeconds: 60

timeoutSeconds: 5

successThreshold: 1

failureThreshold: 5

readinessProbe:

httpGet:

path: /ready

port: 8181

scheme: HTTP

securityContext:

allowPrivilegeEscalation: false

capabilities:

add:

- NET_BIND_SERVICE

drop:

- all

readOnlyRootFilesystem: true

dnsPolicy: Default

volumes:

- name: config-volume

configMap:

name: coredns

items:

- key: Corefile

path: Corefile

---

apiVersion: v1

kind: Service

metadata:

name: kube-dns

namespace: kube-system

annotations:

prometheus.io/port: "9153"

prometheus.io/scrape: "true"

labels:

k8s-app: kube-dns

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

kubernetes.io/name: "CoreDNS"

spec:

selector:

k8s-app: kube-dns

clusterIP: 10.100.0.2 # must be an address inside the Service CIDR planned when the cluster was initialized

ports:

- name: dns

port: 53

protocol: UDP

- name: dns-tcp

port: 53

protocol: TCP

- name: metrics

port: 9153

protocol: TCP

4.2 Create the DNS Pods

kubectl apply -f your-yamlfilename.yaml

4.3 Update the DNS setting in the kubelet configuration file on each node:

cat /var/lib/kubelet/config.yaml

clusterDNS:

- 10.100.0.2

Set clusterDNS to the address of the CoreDNS Service:
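A quick way to confirm that a node's kubelet points at the CoreDNS Service is to extract the first clusterDNS entry and compare it with the Service's cluster IP. The sketch below assumes the list layout shown above; `cluster_dns_of` is a hypothetical helper:

```shell
#!/bin/sh
# cluster_dns_of
# Reads a kubelet config.yaml on stdin and prints the first address
# listed under the top-level `clusterDNS:` key.
cluster_dns_of() {
  awk '
    /^clusterDNS:/ { in_list = 1; next }
    in_list && /^[ \t]*-[ \t]*/ {
      sub(/^[ \t]*-[ \t]*/, "")   # strip the list marker
      print
      exit
    }
    in_list { exit }              # key present but no list item follows
  '
}

# Usage on a node (the kubectl part needs access to the cluster):
#   want=$(kubectl get svc kube-dns -n kube-system -o jsonpath='{.spec.clusterIP}')
#   have=$(cluster_dns_of < /var/lib/kubelet/config.yaml)
#   [ "$want" = "$have" ] && echo "clusterDNS matches" || echo "mismatch: $have vs $want"
```

If the value had to be changed, the kubelet needs a restart (systemctl restart kubelet) before new Pods pick it up.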

root@k8s-master-1:~# kubectl get svc -n kube-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kube-dns ClusterIP 10.100.0.2 <none> 53/UDP,53/TCP,9153/TCP 20m

kubelet ClusterIP None <none> 10250/TCP,10255/TCP,4194/TCP 26d

Test whether external domain names can be resolved and reached:

kubectl run net-test --image=alpine -- sleep 360000

kubectl exec -it net-test -- sh

/ # ping baidu.com

PING baidu.com (110.242.68.66): 56 data bytes

64 bytes from 110.242.68.66: seq=0 ttl=52 time=25.115 ms

64 bytes from 110.242.68.66: seq=1 ttl=52 time=25.380 ms

64 bytes from 110.242.68.66: seq=2 ttl=52 time=25.212 ms

5. Deploying the Dashboard:

5.1 Official Kubernetes Dashboard manifest URL:

https://raw.githubusercontent.com/kubernetes/dashboard/v2.7.0/aio/deploy/recommended.yaml

# Copyright 2017 The Kubernetes Authors.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

apiVersion: v1

kind: Namespace

metadata:

name: kubernetes-dashboard

---

apiVersion: v1

kind: ServiceAccount

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: admin-user

namespace: kubernetes-dashboard

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: admin-user

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: cluster-admin

subjects:

- kind: ServiceAccount

name: admin-user

namespace: kubernetes-dashboard

---

kind: Service

apiVersion: v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

spec:

type: NodePort

ports:

- port: 443

targetPort: 8443

nodePort: 30000

selector:

k8s-app: kubernetes-dashboard

---

apiVersion: v1

kind: Secret

type: kubernetes.io/service-account-token

metadata:

name: dashboard-admin-user

namespace: kubernetes-dashboard

annotations:

kubernetes.io/service-account.name: "admin-user"

---

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-certs

namespace: kubernetes-dashboard

type: Opaque

---

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-csrf

namespace: kubernetes-dashboard

type: Opaque

data:

csrf: ""

---

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-key-holder

namespace: kubernetes-dashboard

type: Opaque

---

kind: ConfigMap

apiVersion: v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-settings

namespace: kubernetes-dashboard

---

kind: Role

apiVersion: rbac.authorization.k8s.io/v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

rules:

# Allow Dashboard to get, update and delete Dashboard exclusive secrets.

- apiGroups: [""]

resources: ["secrets"]

resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]

verbs: ["get", "update", "delete"]

# Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.

- apiGroups: [""]

resources: ["configmaps"]

resourceNames: ["kubernetes-dashboard-settings"]

verbs: ["get", "update"]

# Allow Dashboard to get metrics.

- apiGroups: [""]

resources: ["services"]

resourceNames: ["heapster", "dashboard-metrics-scraper"]

verbs: ["proxy"]

- apiGroups: [""]

resources: ["services/proxy"]

resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]

verbs: ["get"]

---

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

rules:

# Allow Metrics Scraper to get metrics from the Metrics server

- apiGroups: ["metrics.k8s.io"]

resources: ["pods", "nodes"]

verbs: ["get", "list", "watch"]

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: kubernetes-dashboard

subjects:

- kind: ServiceAccount

name: kubernetes-dashboard

namespace: kubernetes-dashboard

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: kubernetes-dashboard

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: kubernetes-dashboard

subjects:

- kind: ServiceAccount

name: kubernetes-dashboard

namespace: kubernetes-dashboard

---

kind: Deployment

apiVersion: apps/v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

spec:

replicas: 1

revisionHistoryLimit: 10

selector:

matchLabels:

k8s-app: kubernetes-dashboard

template:

metadata:

labels:

k8s-app: kubernetes-dashboard

spec:

securityContext:

seccompProfile:

type: RuntimeDefault

containers:

- name: kubernetes-dashboard

image: kubernetesui/dashboard:v2.7.0

imagePullPolicy: Always

ports:

- containerPort: 8443

protocol: TCP

args:

- --auto-generate-certificates

- --namespace=kubernetes-dashboard

- --token-ttl=3600

# Uncomment the following line to manually specify Kubernetes API server Host

# If not specified, Dashboard will attempt to auto discover the API server and connect

# to it. Uncomment only if the default does not work.

# - --apiserver-host=http://my-address:port

volumeMounts:

- name: kubernetes-dashboard-certs

mountPath: /certs

# Create on-disk volume to store exec logs

- mountPath: /tmp

name: tmp-volume

livenessProbe:

httpGet:

scheme: HTTPS

path: /

port: 8443

initialDelaySeconds: 30

timeoutSeconds: 30

securityContext:

allowPrivilegeEscalation: false

readOnlyRootFilesystem: true

runAsUser: 1001

runAsGroup: 2001

volumes:

- name: kubernetes-dashboard-certs

secret:

secretName: kubernetes-dashboard-certs

- name: tmp-volume

emptyDir: {}

serviceAccountName: kubernetes-dashboard

nodeSelector:

"kubernetes.io/os": linux

# Comment the following tolerations if Dashboard must not be deployed on master

tolerations:

- key: node-role.kubernetes.io/master

effect: NoSchedule

---

kind: Service

apiVersion: v1

metadata:

labels:

k8s-app: dashboard-metrics-scraper

name: dashboard-metrics-scraper

namespace: kubernetes-dashboard

spec:

ports:

- port: 8000

targetPort: 8000

selector:

k8s-app: dashboard-metrics-scraper

---

kind: Deployment

apiVersion: apps/v1

metadata:

labels:

k8s-app: dashboard-metrics-scraper

name: dashboard-metrics-scraper

namespace: kubernetes-dashboard

spec:

replicas: 1

revisionHistoryLimit: 10

selector:

matchLabels:

k8s-app: dashboard-metrics-scraper

template:

metadata:

labels:

k8s-app: dashboard-metrics-scraper

spec:

securityContext:

seccompProfile:

type: RuntimeDefault

containers:

- name: dashboard-metrics-scraper

image: kubernetesui/metrics-scraper:v1.0.8

ports:

- containerPort: 8000

protocol: TCP

livenessProbe:

httpGet:

scheme: HTTP

path: /

port: 8000

initialDelaySeconds: 30

timeoutSeconds: 30

volumeMounts:

- mountPath: /tmp

name: tmp-volume

securityContext:

allowPrivilegeEscalation: false

readOnlyRootFilesystem: true

runAsUser: 1001

runAsGroup: 2001

serviceAccountName: kubernetes-dashboard

nodeSelector:

"kubernetes.io/os": linux

# Comment the following tolerations if Dashboard must not be deployed on master

tolerations:

- key: node-role.kubernetes.io/master

effect: NoSchedule

volumes:

- name: tmp-volume

emptyDir: {}

5.2 Deploy the official Dashboard

kubectl apply -f your-yamlfilename.yaml

5.3 Verify the deployment:

Configure forwarding on the HA load balancers:

Append the following to /etc/haproxy/haproxy.cfg:

listen k8s-dashboard-80

bind 192.168.60.188:30000

mode tcp

server 192.168.60.160 192.168.60.160:30000 check inter 2000 fall 3 rise 5

server 192.168.60.161 192.168.60.161:30000 check inter 2000 fall 3 rise 5

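server 192.168.60.162 192.168.60.162:30000 check inter 2000 fall 3 rise 5

Restart the service to apply the configuration:

systemctl restart haproxy

5.4 Logging in to the Dashboard

Open https://192.168.60.188:30000 to log in to the Dashboard.

There are two ways to log in to the Dashboard:

Token-based login

kubeconfig-based login

5.4.1 Token-based login

1. Get the token required to log in to the Dashboard: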

root@ha-2:~# kubectl get secret -n kubernetes-dashboard dashboard-admin-user -o jsonpath='{.data.token}' | base64 -d

eyJhbGciOiJSUzI1NiIsImtpZCI6IkZlNXA1VkxncEJsUGZ1YzEtYUY2UGllSDMxTi11eEdBQ0NHQWM1akhsNlkifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdXNlciIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJhZG1pbi11c2VyIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiN2UwMjk5OWUtY2FiNC00YWQyLWE0NjctMGFkNjNlMDY5MmVkIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmVybmV0ZXMtZGFzaGJvYXJkOmFkbWluLXVzZXIifQ.Q9qMzebmlJHMBvgqAkFt5du-zqE50nC2EsJC1VzZFSaQv_NlUAnuTwa2DpvT4MvWcaX7etiG2Dy-qiaDO1DqlqEcU24UebgEqqYREkJyIm_UQau5o1mfDUs4UyRIK6uvr6q7f7LbmwnLuQ13OkuObE_0CbNnz0eqb34A-_by1F6BaiGCotMj6AjSAtKM-4xAeeK9Y2fqvLQQCCOzqwNcd8BgPoFMgb-XIBFaMm4LlEoWJdzUxi-VGBqxPiYfW_YZYqNGZknwnlN9n_xkRg58Ktj9rjHEi5vSi_Pt35oMG2UW0d3-sRpAH_nhCcWK3uZl9khv4T8KPGvvAGioXDGZwQ

2. Open the Dashboard login page in a browser

3. Select Token login, paste the token into the field below it, and click Sign in
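If the login is rejected, it can help to check which service account a token actually belongs to. A service account token is a JWT, so its middle segment is base64url-encoded JSON. The sketch below decodes that segment without verifying the signature; `jwt_payload` is a hypothetical helper and relies on the same `base64 -d` used above:

```shell
#!/bin/sh
# jwt_payload TOKEN
# Prints the decoded JSON payload (second dot-separated segment) of a JWT.
# The signature is NOT verified; this is for inspection only.
jwt_payload() {
  # Convert the base64url alphabet back to standard base64.
  seg=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # base64url drops the trailing padding; restore it for base64 -d.
  while [ $(( ${#seg} % 4 )) -ne 0 ]; do seg="$seg="; done
  printf '%s' "$seg" | base64 -d
}

# Usage:
#   TOKEN=$(kubectl get secret -n kubernetes-dashboard dashboard-admin-user \
#             -o jsonpath='{.data.token}' | base64 -d)
#   jwt_payload "$TOKEN"
# For the token created in this section, the "sub" claim should name the
# admin-user service account in the kubernetes-dashboard namespace.
```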

5.4.2 kubeconfig-based login

The /root/.kube/config file from a master node can serve as the basis for this login.

1. Copy /root/.kube/config to /tmp

cp /root/.kube/config /tmp/kubeconfig

2. Add the token to the kubeconfig file

root@ha-2:~# cat /tmp/kubeconfig

apiVersion: v1

clusters:

- cluster:

server: ""

name: ./kubeconfig.conf

- cluster:

certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURtakNDQW9LZ0F3SUJBZ0lVUVBLUTBNNDFmdUx1UUd5ZnFFWXFGZ20vZ0hzd0RRWUpLb1pJaHZjTkFRRUwKQlFBd1pERUxNQWtHQTFVRUJoTUNRMDR4RVRBUEJnTlZCQWdUQ0VoaGJtZGFhRzkxTVFzd0NRWURWUVFIRXdKWQpVekVNTUFvR0ExVUVDaE1EYXpoek1ROHdEUVlEVlFRTEV3WlRlWE4wWlcweEZqQVVCZ05WQkFNVERXdDFZbVZ5CmJtVjBaWE10WTJFd0lCY05Nak13TnpJNE1USXlNVEF3V2hnUE1qRXlNekEzTURReE1qSXhNREJhTUdReEN6QUoKQmdOVkJBWVRBa05PTVJFd0R3WURWUVFJRXdoSVlXNW5XbWh2ZFRFTE1Ba0dBMVVFQnhNQ1dGTXhEREFLQmdOVgpCQW9UQTJzNGN6RVBNQTBHQTFVRUN4TUdVM2x6ZEdWdE1SWXdGQVlEVlFRREV3MXJkV0psY201bGRHVnpMV05oCk1JSUJJakFOQmdrcWhraUc5dzBCQVFFRkFBT0NBUThBTUlJQkNnS0NBUUVBcE9XdDd5ekh6S0F6K1hhQlRodEoKbUtRT0Q3WnJTWnIyUElWVVBJY2VGaG5BNE9FMUtIUSsyM0p2UXZLYzkzeHdTOXBPMEg0K2tkZmhoeXdkR3kzaApqZnRjeEJDc1RKUVhnVFdTWmdySndOZnZvTUlvS29UcTJoNVRMeGkzdmRjak1vdi9STk4rSnlVMjFHR2dqL0FqCnorTmM0cjVDVXVka2t5Rk1EYVBWVW1iYlVFSm5IOWIxT2tzOG9JKzZrTm9kdlIyTU9RRGs0dGFpVU95SmUzMzIKOWR5eVlycjkxaHp2VUJ6ZWswLzRJb0VpV0pZZHNDSGc0Uld4a0FsYkF3Z2dPcHVzNzNHN2lOVHdsRmREOER6YQpqT1I3Skt6OGxudDhYWHA3dlJZdVJFdWNLTzVqd1FqM0gwOHBCWXhwbGZ0RVJGRlV0S05VczJjeUNJMnZPbGltCjN3SURBUUFCbzBJd1FEQU9CZ05WSFE4QkFmOEVCQU1DQVFZd0R3WURWUjBUQVFIL0JBVXdBd0VCL3pBZEJnTlYKSFE0RUZnUVVnOXhqdnl5ZVhpV0VCb3o3WTlER3dXSm5wdmd3RFFZSktvWklodmNOQVFFTEJRQURnZ0VCQUJkRAo3VXR4NGEwZExXeFNmYjRubjVPR3RYTTlhRVJCUHNRTU5TUnRyLzlxNUkxQkY0S2pvdUtZb3RkcEF5WDI4ZTdtCnJmZXBRRUdpVWFPYk9WOEN3bUV1TVBObUlXdzlvbWhwcVJ5UHlPSjRnVmt0QUk5R2FmbktTKzNLQ1RLVEVOcE4KSWwwSlh1bytQSzBTWFk5Y0srZG5GWk9HRHJkdUFnbHpxdTFJOVYrR2JXbnpvYlFENElLc0V1TG1TTzcvVVVENwpiWnNteDk5a1ZxbStuZ1gxNU44Z2xsTFhydUJBbkVpQWx2QVNSNG9LQTZaMWJiQmp1RWZnYXpPZURBYlcxN3QxCjQ5T2cvVVZ0L2o2OVRRUERUY1ZhRkFYM3NVajlXVitBV0JFQkFnTXJVREQ1eSs5dHQ2S2k0ZndLQUpkQUdpYTkKMkEycjRRVGhZNGxweERHdlNSQT0KLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo=

server: https://192.168.60.188:6443

name: cluster1

contexts:

- context:

cluster: cluster1

user: admin

name: context-cluster1

current-context: context-cluster1

kind: Config

preferences: {}

users:

- name: admin

user:

client-certificate-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUQyakNDQXNLZ0F3SUJBZ0lVYncySTlSNjFWVGMvQ2tiSC9DaVNVVzFTM3RBd0RRWUpLb1pJaHZjTkFRRUwKQlFBd1pERUxNQWtHQTFVRUJoTUNRMDR4RVRBUEJnTlZCQWdUQ0VoaGJtZGFhRzkxTVFzd0NRWURWUVFIRXdKWQpVekVNTUFvR0ExVUVDaE1EYXpoek1ROHdEUVlEVlFRTEV3WlRlWE4wWlcweEZqQVVCZ05WQkFNVERXdDFZbVZ5CmJtVjBaWE10WTJFd0lCY05Nak13TnpJNE1USXlNVEF3V2hnUE1qQTNNekEzTVRVeE1qSXhNREJhTUdjeEN6QUoKQmdOVkJBWVRBa05PTVJFd0R3WURWUVFJRXdoSVlXNW5XbWh2ZFRFTE1Ba0dBMVVFQnhNQ1dGTXhGekFWQmdOVgpCQW9URG5ONWMzUmxiVHB0WVhOMFpYSnpNUTh3RFFZRFZRUUxFd1pUZVhOMFpXMHhEakFNQmdOVkJBTVRCV0ZrCmJXbHVNSUlCSWpBTkJna3Foa2lHOXcwQkFRRUZBQU9DQVE4QU1JSUJDZ0tDQVFFQXdWdENoQllDMEl3RWIrV3IKazRzaXlZc0VsNDlVaWV0dU12SUNRWWk3NVBzSlpKOFJmS1dUSGs4d2pkL2VJMlQ4bHNSRUIvRHJMNmFIZmJCWQpOcDE0RGVjcm11WnlORXBuUEZNeW1ac1pzdkZQUno5TUo4TTBGWXprS215cmkyK0duNlFDaU5PbWNZOE5qc0pxClRvVlBpb0RlcXFkZW5uNWxjQ0xHSDB5Nnk1ZlE2Z3RCbHZKNDdiT3pTMEJmT3pFcFhYZDhvRmN2cmxtc1BoakMKMjl6eE0wWXl1U0RvMktHaVY2NjRBWlVDOWwzQlZ1aE5nNlQrZUxUTXB0NlcwRFRpWVdzRVlSWnhZNmVhNUk0RgozQkJBQjAvM0dWTlhNUEdRM0V6bFROMlY4d1FoSWlyVWt4b3owN2hxeTd4bFByZkR5VE9aQWlKVWtFeTloOUt2CmZQdEozUUlEQVFBQm8zOHdmVEFPQmdOVkhROEJBZjhFQkFNQ0JhQXdIUVlEVlIwbEJCWXdGQVlJS3dZQkJRVUgKQXdFR0NDc0dBUVVGQndNQ01Bd0dBMVVkRXdFQi93UUNNQUF3SFFZRFZSME9CQllFRkpOZittVVVqWGpkVE9KZgp0TXJlRVVQS2s3RnZNQjhHQTFVZEl3UVlNQmFBRklQY1k3OHNubDRsaEFhTSsyUFF4c0ZpWjZiNE1BMEdDU3FHClNJYjNEUUVCQ3dVQUE0SUJBUUJiaThnQXYxWkxMNFJVTVRkbVNXZXltMFV3U2hURDJLMmlNdytBZFp0bW5rYVUKTVlHREhORWxxTWtycHd0WTlmOUUxVFlqKzFXU3Zmb1pBMTZldFh4SStyZGFYcjRQSmtsQ21nREQxMVFTK3JrUgorS2x5V2hYQlc0UDQwcnhLcmdpaWd2L2tIS1M1UVVSV3FVL3ZZV29ydXdnTldnMjgyajJ6bHAwbjRCMTlRMFg3CjB6SWlUQlYyZUFmMUVMTVBRZG5IeVplQktGeWluWUE3aTNURUJlVWVwekUrQU4ydWdWbVVYWHlaYks2S3dzZFkKcUxVSDlnSDRnYVNKU2tlVGRLSXJheHp4UXNLWUdGMUlVVXN1YkJrREh1YXVLdnVjbGJPaXBJVmI1TUpzM2txRgp0SFJGUWRuVXpCVFZseXg2SXFnMTA0bnF4aUFkUldsSHhua0lCSm5UCi0tLS0tRU5EIENFUlRJRklDQVRFLS0tLS0K

client-key-data: LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFcFFJQkFBS0NBUUVBd1Z0Q2hCWUMwSXdFYitXcms0c2l5WXNFbDQ5VWlldHVNdklDUVlpNzVQc0paSjhSCmZLV1RIazh3amQvZUkyVDhsc1JFQi9Eckw2YUhmYkJZTnAxNERlY3JtdVp5TkVwblBGTXltWnNac3ZGUFJ6OU0KSjhNMEZZemtLbXlyaTIrR242UUNpTk9tY1k4TmpzSnFUb1ZQaW9EZXFxZGVubjVsY0NMR0gweTZ5NWZRNmd0Qgpsdko0N2JPelMwQmZPekVwWFhkOG9GY3ZybG1zUGhqQzI5enhNMFl5dVNEbzJLR2lWNjY0QVpVQzlsM0JWdWhOCmc2VCtlTFRNcHQ2VzBEVGlZV3NFWVJaeFk2ZWE1STRGM0JCQUIwLzNHVk5YTVBHUTNFemxUTjJWOHdRaElpclUKa3hvejA3aHF5N3hsUHJmRHlUT1pBaUpVa0V5OWg5S3ZmUHRKM1FJREFRQUJBb0lCQVFDTU1uNk5SV1J2RUVjYgpWMTFMMHVPN1hPaE1lR21rd3ljWkcyN1ZVNjVoZmtBMlREd2lzKzl6VjVudUZQZDlsSGl0WE11ek1sVmxMSXNyCmNGVFY3T0dpdXc1YjkyR3hWbzE2S1IwVVVXaVYzZkJNeWJHUFZ6T0p1S21ydFRYQkdYRjBpVGdwTzhXQXEyZjYKTGk5a2xiYXh4M1VzS2NVcWlKMUdiSUJaSzRwNW1XcUtiMDBxc1VsVnBRa09ObUpHREsveU5rejgzNTRzN0s0UwpNdmU1NEhERzFadWNQNTU1bDZrc3U5MFgva2RLRkQvMTBFOFpLVDJDcUpQTlEycTFqQ3I3dEJqYWlYdVpoVCtTCkZtNll0bENHc21UOXFYOGJjdGtJOGV6YjhHOVllYlJ6NEZMTi9ScWFvK1JxOGhOWkZoUGZ5a1UraFhOMlV5bk0KaTJ6a0RUc2xBb0dCQVB2ZlRhbE5hNjdLVElXUGR3RHA3VXJlNDBralQ3Yy9VNTg0NThKbzVrRGgwUTlsUklsZApxN3d3TDVBZ0lhd0JaWUlWT2psOUs1TDl4NW1nRUkvRVFab2FUUGNTKzJIYlVKaWdGZ0N1MXVVZ3lKakd2Y01nCk5CVHJsTnJ1OW1TU1RxQmV0Tnl4RTcwa2RvZUJaZzAzVFgrNUF4dlYvMmJpby9xcGVhMmRxR3ViQW9HQkFNU0cKZGhNeGk0MUYwaFN4STQ0T3VmOHpqeVdSRGxRSFZhNUxzMCtGQkFDdlNyU0FIR3llYks0TjAyK1k4TXhMMGpmQgp6MzhKVlVpU0pwd0lHM3N0Q05XS0ZOSUw2N0hUVm1wdlVjM2xoN09hUnZBVGdjYWRhZ0ZKMEltbCtScXJzMEJnClpRV0JlV1liOUdLMDdEZXRYK0o5SkVxNHBtN1hMUWtPWFJnTXBDUG5Bb0dCQU5SYlBtZ3F6VXB1Wjd3SDdHYkoKMC9aWEc3d3pXR2VBcmVsRm1pbFFOaW1uK3BLSGFCU0U5R0ZUSXhiWjhHbk1ONkJJYzNHNjlmMFZtSzhPeEVmaQpTUWs3ZVg2cTgyVmErb1hrR3dqeVlGNkltSGd1d0JsKzBrcDlJV0RCTHQ0MmVMSS9oeSsyNEpTTTVKNTAyK3p5Cm5wVzhFRUhzMkV3UGMvL0gyYjRtZWJSWEFvR0FNam1mQVlhL0FJcmdodE5Dbi9LWmpHUXo2RWpySFlTR1hEWVMKakhjVkw4dWN6d2FTdlJ1NzhMdXQxcTZDaytPb3hRRXVNMnhDTkhyTmpVRHhMUWkwWWthWXpabW9VZGtPRThPQgpaNXFLbE5jUDNCbFFLRjlna1JXNVN3UjA1bUVOUFoybEU4UWtMM2xqZXJwOFNEcHg3K05GbkNjV3Vlc2FJbDllCllXQVJydThDZ1lFQTVocjBScENUSW5xeVN
GZmN5RG5HYW40aTU4NmhDeGpTNGJYaDNUODZyS29DUmU5dGE2Z1QKWEc2ZlgzTlRqRUpRNTNYRFd3eWVDemovQ0pvSE5NWXFzV1JuaTFPcnZ5Vm5JaHVaU3Y4dGY3dC9VYnRGV3Yragp4dkIyMmRGcjlkWjY3TDFzSUdlMTBidUVjN0FLc0ozMFA5L0RyZHBoVUEza2ZjbnNPaElOWlVrPQotLS0tLUVORCBSU0EgUFJJVkFURSBLRVktLS0tLQo=

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IkZlNXA1VkxncEJsUGZ1YzEtYUY2UGllSDMxTi11eEdBQ0NHQWM1akhsNlkifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdXNlciIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJhZG1pbi11c2VyIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiN2UwMjk5OWUtY2FiNC00YWQyLWE0NjctMGFkNjNlMDY5MmVkIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmVybmV0ZXMtZGFzaGJvYXJkOmFkbWluLXVzZXIifQ.Q9qMzebmlJHMBvgqAkFt5du-zqE50nC2EsJC1VzZFSaQv_NlUAnuTwa2DpvT4MvWcaX7etiG2Dy-qiaDO1DqlqEcU24UebgEqqYREkJyIm_UQau5o1mfDUs4UyRIK6uvr6q7f7LbmwnLuQ13OkuObE_0CbNnz0eqb34A-_by1F6BaiGCotMj6AjSAtKM-4xAeeK9Y2fqvLQQCCOzqwNcd8BgPoFMgb-XIBFaMm4LlEoWJdzUxi-VGBqxPiYfW_YZYqNGZknwnlN9n_xkRg58Ktj9rjHEi5vSi_Pt35oMG2UW0d3-sRpAH_nhCcWK3uZl9khv4T8KPGvvAGioXDGZwQ

3. Download /tmp/kubeconfig to your local machine. On the Dashboard login page, choose Kubeconfig login and select the kubeconfig file you just downloaded.
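Step 2 above edits the file by hand. As a sketch of an alternative, `kubectl config set-credentials` can write the token into the copied file. The wrapper name `add_token_to_kubeconfig` and the `KUBECTL` override variable are hypothetical and exist only so the command can be previewed with `KUBECTL=echo`:

```shell
#!/bin/sh
# add_token_to_kubeconfig KUBECONFIG USER TOKEN
# Sets the bearer token for USER inside the given kubeconfig file.
# Set KUBECTL=echo to print the command instead of running it.
add_token_to_kubeconfig() {
  "${KUBECTL:-kubectl}" config set-credentials "$2" \
    --token="$3" --kubeconfig="$1"
}

# Usage (user name "admin" matches the kubeconfig shown above):
#   TOKEN=$(kubectl get secret -n kubernetes-dashboard dashboard-admin-user \
#             -o jsonpath='{.data.token}' | base64 -d)
#   add_token_to_kubeconfig /tmp/kubeconfig admin "$TOKEN"
```

Working on the copy in /tmp keeps the original /root/.kube/config untouched.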

6. Deploying third-party dashboards (Kuboard, KubeSphere)

6.1 Deploying Kuboard on Kubernetes

Official site: https://kuboard.cn/overview

GitHub: https://github.com/eip-work/kuboard-press

6.1.1 Install NFS and create the shared directory

root@HA-1:~# apt install nfs-server

root@HA-1:~# mkdir -p /data/k8sdata/kuboard

root@HA-1:~# echo "/data/k8sdata/kuboard *(rw,no_root_squash)" >> /etc/exports

root@HA-1:~# systemctl restart nfs-server && systemctl enable nfs-server

6.1.2 Create the Kuboard deployment file kuboard-all-in-one.yaml

cat kuboard-all-in-one.yaml

apiVersion: v1

kind: Namespace

metadata:

name: kuboard

---

apiVersion: apps/v1

kind: Deployment

metadata:

annotations: {}

labels:

k8s.kuboard.cn/name: kuboard-v3

name: kuboard-v3

namespace: kuboard

spec:

replicas: 1

revisionHistoryLimit: 10

selector:

matchLabels:

k8s.kuboard.cn/name: kuboard-v3

template:

metadata:

labels:

k8s.kuboard.cn/name: kuboard-v3

spec:

volumes:

- name: kuboard-data

nfs:

server: 192.168.60.169 # NFS server address

path: /data/k8sdata/kuboard # shared directory on the NFS server

containers:

- env:

- name: "KUBOARD_ENDPOINT"

value: "http://kuboard-v3:80"

- name: "KUBOARD_AGENT_SERVER_TCP_PORT"

value: "10081"

image: swr.cn-east-2.myhuaweicloud.com/kuboard/kuboard:v3

volumeMounts:

- name: kuboard-data

mountPath: /data

readOnly: false

imagePullPolicy: Always

livenessProbe:

failureThreshold: 3

httpGet:

path: /

port: 80

scheme: HTTP

initialDelaySeconds: 30

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 1

name: kuboard

ports:

- containerPort: 80

name: web

protocol: TCP

- containerPort: 443

name: https

protocol: TCP

- containerPort: 10081

name: peer

protocol: TCP

- containerPort: 10081

name: peer-u

protocol: UDP

readinessProbe:

failureThreshold: 3

httpGet:

path: /

port: 80

scheme: HTTP

initialDelaySeconds: 30

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 1

resources: {}

---

apiVersion: v1

kind: Service

metadata:

annotations: {}

labels:

k8s.kuboard.cn/name: kuboard-v3

name: kuboard-v3

namespace: kuboard

spec:

ports:

- name: web

nodePort: 30080

port: 80

protocol: TCP

targetPort: 80

- name: tcp

nodePort: 30081

port: 10081

protocol: TCP

targetPort: 10081

- name: udp

nodePort: 30081

port: 10081

protocol: UDP

targetPort: 10081

selector:

k8s.kuboard.cn/name: kuboard-v3

sessionAffinity: None

type: NodePort

6.1.3 Create the Kuboard Pod

kubectl apply -f kuboard-all-in-one.yaml

6.1.4 Update the load balancers (ha-1 and ha-2)

Append the following to /etc/haproxy/haproxy.cfg:

listen k8s-dashboard-30080

bind 192.168.60.188:30080

mode tcp

server 192.168.60.160 192.168.60.160:30080 check inter 2000 fall 3 rise 5

server 192.168.60.161 192.168.60.161:30080 check inter 2000 fall 3 rise 5

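server 192.168.60.162 192.168.60.162:30080 check inter 2000 fall 3 rise 5

Restart the service to apply the configuration:

systemctl restart haproxy

6.1.5 Open http://your-host-ip:30080 in a browser to reach the Kuboard v3.x interface. The default username and password are:

Username: admin

Password: Kuboard123

6.1.6 Uninstall Kuboard:

kubectl delete -f kuboard-all-in-one.yaml

6.2 Deploying KubeSphere on Kubernetes

Official documentation: https://www.kubesphere.io/zh/docs/v3.3/quick-start/minimal-kubesphere-on-k8s/

6.2.1 A default StorageClass must be prepared in advance. Using NFS as an example: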

apiVersion: v1

kind: Namespace

metadata:

name: nfs

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: nfs-client-provisioner

# replace with namespace where provisioner is deployed

namespace: nfs

---

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: nfs-client-provisioner-runner

rules:

- apiGroups: [""]

resources: ["nodes"]

verbs: ["get", "list", "watch"]

- apiGroups: [""]

resources: ["persistentvolumes"]

verbs: ["get", "list", "watch", "create", "delete"]

- apiGroups: [""]

resources: ["persistentvolumeclaims"]

verbs: ["get", "list", "watch", "update"]

- apiGroups: ["storage.k8s.io"]

resources: ["storageclasses"]

verbs: ["get", "list", "watch"]

- apiGroups: [""]

resources: ["events"]

verbs: ["create", "update", "patch"]

---

kind: ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: run-nfs-client-provisioner

subjects:

- kind: ServiceAccount

name: nfs-client-provisioner

# replace with namespace where provisioner is deployed

namespace: nfs

roleRef:

kind: ClusterRole

name: nfs-client-provisioner-runner

apiGroup: rbac.authorization.k8s.io

---

kind: Role

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: leader-locking-nfs-client-provisioner

# replace with namespace where provisioner is deployed

namespace: nfs

rules:

- apiGroups: [""]

resources: ["endpoints"]

verbs: ["get", "list", "watch", "create", "update", "patch"]

---

kind: RoleBinding

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: leader-locking-nfs-client-provisioner

# replace with namespace where provisioner is deployed

namespace: nfs

subjects:

- kind: ServiceAccount

name: nfs-client-provisioner

# replace with namespace where provisioner is deployed

namespace: nfs

roleRef:

kind: Role

name: leader-locking-nfs-client-provisioner

apiGroup: rbac.authorization.k8s.io

---

apiVersion: storage.k8s.io/v1

kind: StorageClass

metadata:

#name: managed-nfs-storage

name: nfs-csi

annotations:

storageclass.kubernetes.io/is-default-class: "true"

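provisioner: k8s-sigs.io/nfs-subdir-external-provisioner # or choose another name; must match the deployment's PROVISIONER_NAME env

reclaimPolicy: Retain # PV reclaim policy; the default is Delete, which removes the data on the NFS server as soon as the PV is deleted

mountOptions:

#- vers=4.1 # some options misbehave with containerd

#- noresvport # tells the NFS client to use a new TCP source port when it re-establishes the network connection

- noatime # do not update the access timestamp in the file's inode on reads; can improve performance under high concurrency

parameters:

#mountOptions: "vers=4.1,noresvport,noatime"

archiveOnDelete: "true" # keep (archive) the data when the claim is deleted; with "false" the data is not kept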

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: nfs-client-provisioner

labels:

app: nfs-client-provisioner

# replace with namespace where provisioner is deployed

namespace: nfs

spec:

replicas: 1

strategy: # deployment strategy

type: Recreate

selector:

matchLabels:

app: nfs-client-provisioner

template:

metadata:

labels:

app: nfs-client-provisioner

spec:

serviceAccountName: nfs-client-provisioner

containers:

- name: nfs-client-provisioner

#image: k8s.gcr.io/sig-storage/nfs-subdir-external-provisioner:v4.0.2

image: registry.cn-qingdao.aliyuncs.com/zhangshijie/nfs-subdir-external-provisioner:v4.0.2

volumeMounts:

- name: nfs-client-root

mountPath: /persistentvolumes

env:

- name: PROVISIONER_NAME

value: k8s-sigs.io/nfs-subdir-external-provisioner

- name: NFS_SERVER

value: 192.168.60.169

- name: NFS_PATH

value: /data/volumes

volumes:

- name: nfs-client-root

nfs:

server: 192.168.60.169

path: /data/volumes

6.2.2 Verify that the StorageClass was created:

root@ha-2:~# kubectl get storageclasses.storage.k8s.io

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

nfs-csi (default) k8s-sigs.io/nfs-subdir-external-provisioner Retain Immediate false 2m50s
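The listing shows that the class exists, not that provisioning works. A sketch of a smoke test: render a small PersistentVolumeClaim against the class and apply it. `render_test_pvc` is a hypothetical helper; the claim name, the default namespace, and the 1Mi size are arbitrary choices for illustration:

```shell
#!/bin/sh
# render_test_pvc NAME [STORAGE_CLASS]
# Prints a minimal PVC manifest that requests 1Mi from STORAGE_CLASS
# (nfs-csi by default, the class created above).
render_test_pvc() {
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: $1
  namespace: default
spec:
  storageClassName: ${2:-nfs-csi}
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Mi
EOF
}

# Usage:
#   render_test_pvc nfs-smoke-test | kubectl apply -f -
#   kubectl get pvc nfs-smoke-test      # STATUS is expected to reach Bound
#   kubectl delete pvc nfs-smoke-test
```

Because the class uses reclaimPolicy Retain, the PV and its directory on the NFS server remain after the claim is deleted and may need manual cleanup.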

6.2.3 Install KubeSphere using the official manifests:

root@ha-2:~# kubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.3.2/kubesphere-installer.yaml

root@ha-2:~# kubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.3.2/cluster-configuration.yaml

6.2.4 Check the installation log:

root@ha-2:~# kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l 'app in (ks-install, ks-installer)' -o jsonpath='{.items[0].metadata.name}') -f

6.2.5 Run kubectl get pod --all-namespaces to check whether all Pods in the KubeSphere namespaces are running normally. If they are, check the console port (30880 by default) with the following command:

root@ha-2:~# kubectl get svc/ks-console -n kubesphere-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ks-console NodePort 10.100.107.75 <none> 80:30880/TCP 2m18s

6.2.6 Configure the load balancers:

Append the following to /etc/haproxy/haproxy.cfg:

listen k8s-dashboard-30880

bind 192.168.60.188:30880

mode tcp

server 192.168.60.160 192.168.60.160:30880 check inter 2000 fall 3 rise 5

server 192.168.60.161 192.168.60.161:30880 check inter 2000 fall 3 rise 5

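server 192.168.60.162 192.168.60.162:30880 check inter 2000 fall 3 rise 5

Restart the service to apply the configuration:

systemctl restart haproxy

6.2.7 Log in to KubeSphere:

Open the load balancer IP on port 30880 in a browser to reach the KubeSphere interface. The default username and password are:

Default account: admin

Default password: P@88w0rd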
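The three HAProxy listen sections used in this guide (ports 30000, 30080, and 30880) differ only in name and port. A sketch of a generator for such a section follows; `haproxy_listen` is a hypothetical helper, and the check parameters are the ones used throughout this guide:

```shell
#!/bin/sh
# haproxy_listen NAME VIP PORT BACKEND_IP...
# Prints a TCP-mode listen section that forwards VIP:PORT to the same
# port on every backend, with the health-check settings used in this guide.
haproxy_listen() {
  name="$1"; vip="$2"; port="$3"; shift 3
  echo "listen $name"
  echo "  bind $vip:$port"
  echo "  mode tcp"
  for ip in "$@"; do
    echo "  server $ip $ip:$port check inter 2000 fall 3 rise 5"
  done
}

# Usage on ha-1 and ha-2: append, validate, then restart.
#   haproxy_listen k8s-dashboard-30880 192.168.60.188 30880 \
#     192.168.60.160 192.168.60.161 192.168.60.162 >> /etc/haproxy/haproxy.cfg
#   haproxy -c -f /etc/haproxy/haproxy.cfg && systemctl restart haproxy
```

Running the configuration check (haproxy -c) before the restart avoids taking the load balancer down on a typo.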
