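Why collect logs:

Nobody can watch a system by hand 24/7, so how do you find out why a feature stopped working? This is where system logs get to show what they can really do. Logs are essential for monitoring and troubleshooting systems in a running environment, so log output must be kept in mind throughout design, development and implementation: later monitoring and failure analysis depend on it.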

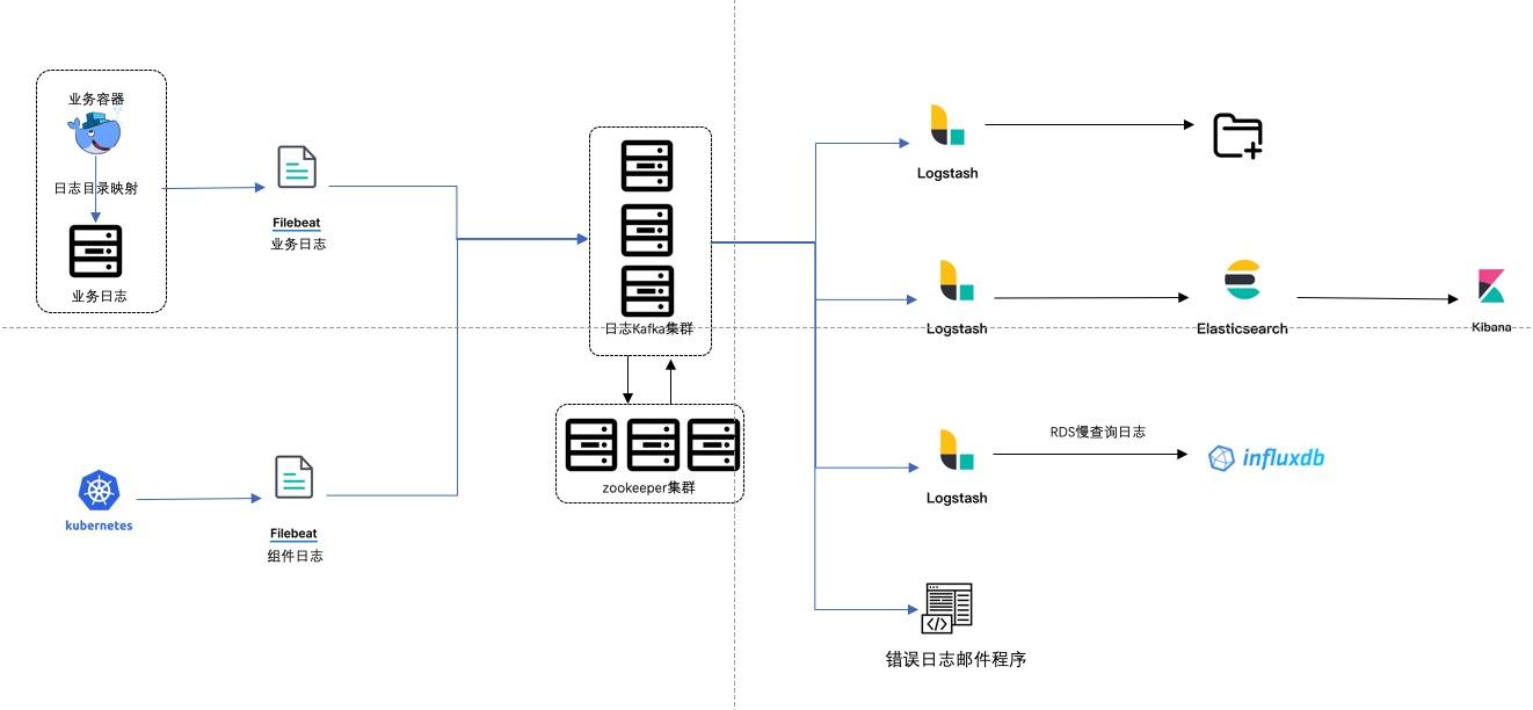

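Log collection: why collect logs at all? Its biggest benefit is that analyzing how users access the system helps improve performance and therefore the load the system can carry.

Log collection overview:

Goals of log collection:

Centralized collection of distributed log data, for centralized search and management

Troubleshooting

Security information and event management (SIEM)

Reporting, statistics and dashboards

Value of log collection:

Log search, troubleshooting, failure recovery, self-healing

Application log analysis, error alerting

Performance analysis, user behavior analysis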

日志收集方式的三种方式:

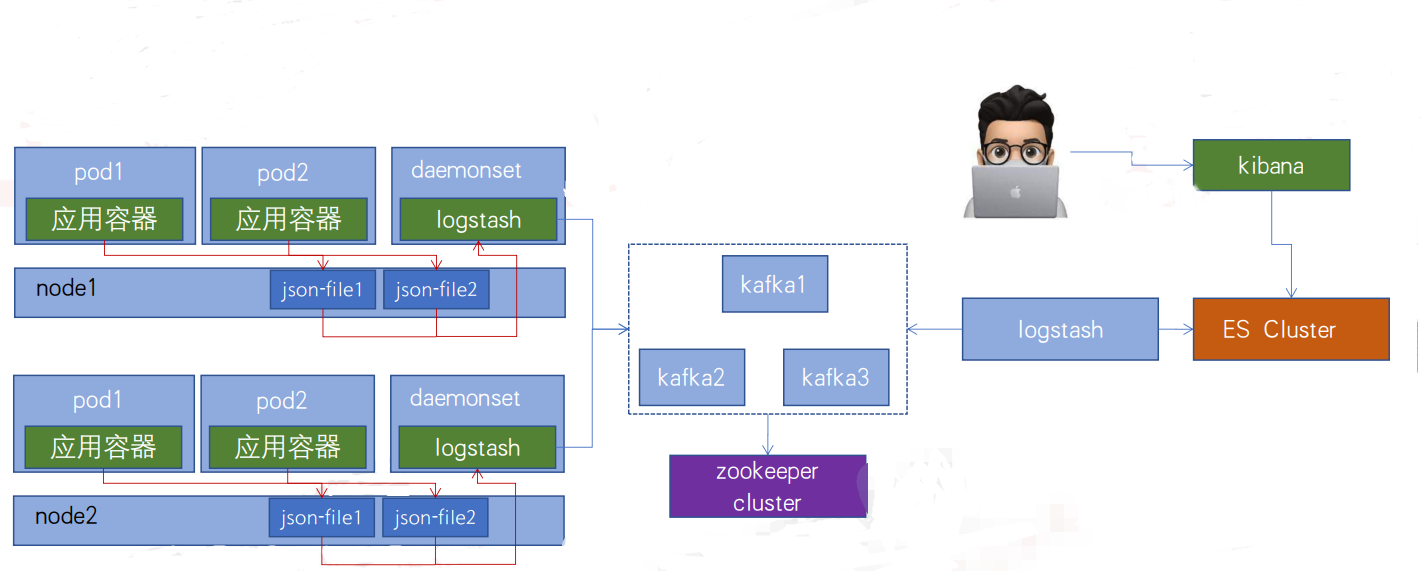

1.node节点收集, 基于Daemonset部署日志收集进程, 实现json-file类型(标准输出/dev/stdout、 错误输出/dev/stderr)日志收集。

2.使用sidcar容器(一个pod多容器)收集当前pod内一个或者多个业务容器的日志(通常基于emptyDir实现业务容器与sidcar之间的日志共享)。

3.在容器内置日志收集服务进程。示例一: daemonset收集jsonfile日志-部署web服务:

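Base environment:

zookeeper && kafka

elasticsearch cluster

logstash

kibana

Kubernetes version v1.27.2

Setting up the base environment:

https://shackles.cn/index.php/archives/96/

https://shackles.cn/index.php/archives/94/

The log collector runs as a DaemonSet and mainly collects the following types of logs:

1. Node-level collection: the DaemonSet-deployed collector gathers json-file logs (standard output /dev/stdout and standard error /dev/stderr), i.e. the stdout and stderr output produced by applications.

2. Host system logs and other logs saved as files on the host.

Implementation: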

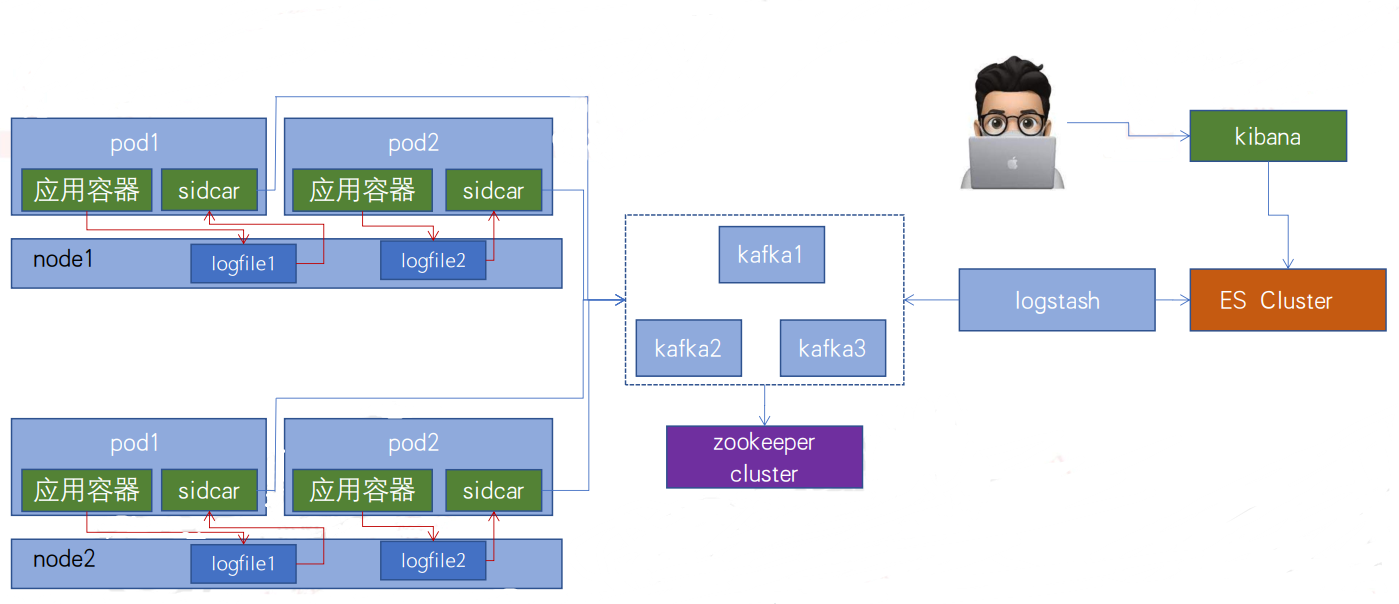

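A DaemonSet creates one Pod on every node to collect both the node's host logs and the Pod logs. In the YAML, the host's log directory and the directory holding all Pod logs are mounted into the collector Pod. The DaemonSet container ships the collected logs to the Kafka cluster, an external Logstash consumes the Kafka messages and stores them in the ES cluster, and the logs are then viewed in Kibana.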
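Because all Pod logs on a node are read from one directory tree, it helps to know that the kubelet lays them out as /var/log/pods/<namespace>_<pod-name>_<pod-uid>/<container-name>/<restart-count>.log, so the file path alone identifies where a line came from. The function below is a minimal POSIX sh sketch of recovering those fields; the name parse_pod_log_path and the pod UIDs used in the comment are hypothetical. It assumes that directory layout, and relies on Kubernetes namespace and Pod names never containing `_`, which is what makes splitting on `_` unambiguous.

```shell
# Sketch: split a kubelet container log path into Kubernetes metadata.
# Assumed layout: /var/log/pods/<namespace>_<pod-name>_<pod-uid>/<container>/<restart-count>.log
parse_pod_log_path() {
  file=$(basename "$1")                                # e.g. 0.log
  container_dir=$(dirname "$1")                        # .../<container>
  pod_dir=$(basename "$(dirname "$container_dir")")    # <namespace>_<pod-name>_<pod-uid>
  container=$(basename "$container_dir")

  namespace=${pod_dir%%_*}    # text before the first '_'
  rest=${pod_dir#*_}          # <pod-name>_<pod-uid>
  pod=${rest%_*}              # text before the last '_'
  uid=${rest##*_}             # text after the last '_'
  restart=${file%.log}        # restart count of the container

  printf 'namespace=%s pod=%s uid=%s container=%s restart=%s\n' \
    "$namespace" "$pod" "$uid" "$container" "$restart"
}

# Usage (hypothetical UID):
# parse_pod_log_path /var/log/pods/kube-system_logstash-elasticsearch-jwptb_0c3e9b7a-1f2d/logstash-elasticsearch/0.log
```

The same split could be done inside Logstash on its `path` field; the shell version only shows the layout the glob `/var/log/pods/*/*/*.log` is matching.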

收集容器和宿主机log的yaml文件

cat <<EOF> DaemonSet-logstash.yaml

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: logstash-elasticsearch

namespace: kube-system

labels:

k8s-app: logstash-logging

spec:

selector:

matchLabels:

name: logstash-elasticsearch

template:

metadata:

labels:

name: logstash-elasticsearch

spec:

tolerations:

# this toleration is to have the daemonset runnable on master nodes

# remove it if your masters can't run pods

- key: node-role.kubernetes.io/master

operator: Exists

effect: NoSchedule

containers:

- name: logstash-elasticsearch

image: registry.cn-shanghai.aliyuncs.com/buildx/logstash:v7.12.1-json-file-log-v1

imagePullPolicy: IfNotPresent

env:

- name: "KAFKA_SERVER"

value: "192.168.60.163:9092,192.168.60.164:9092,192.168.60.165:9092" #kafka集群IP

- name: "TOPIC_ID"

value: "jsonfile-log-topic"

- name: "CODEC"

value: "json"

# resources:

# limits:

# cpu: 1000m

# memory: 1024Mi

# requests:

# cpu: 500m

# memory: 1024Mi

volumeMounts:

- name: varlog #定义宿主机系统日志挂载路径

mountPath: /var/log #宿主机系统日志挂载点

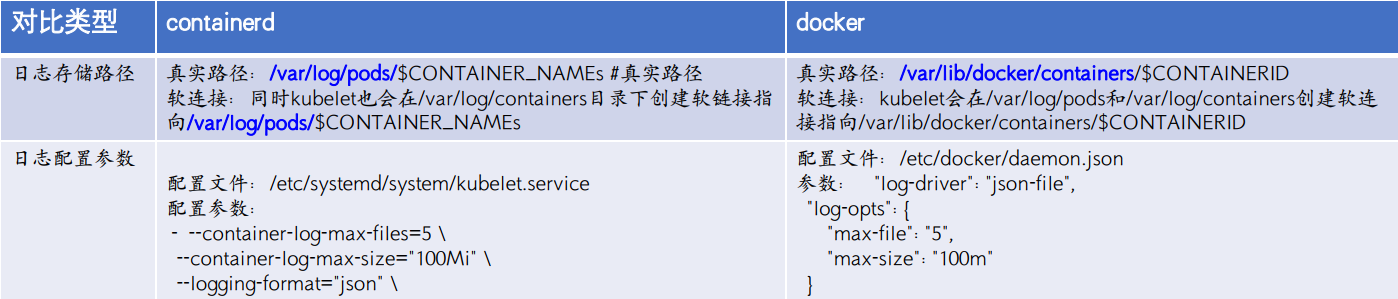

- name: varlibdockercontainers #定义容器日志挂载路径,和logstash配置文件中的收集路径保持一直

#mountPath: /var/lib/docker/containers #docker挂载路径

mountPath: /var/log/pods #containerd挂载路径,此路径与logstash的日志收集路径必须一致

readOnly: false

terminationGracePeriodSeconds: 30

volumes:

- name: varlog

hostPath:

path: /var/log #宿主机系统日志

- name: varlibdockercontainers

hostPath:

#path: /var/lib/docker/containers #docker的宿主机日志路径

path: /var/log/pods #containerd的宿主机日志路径

EOF日志收集logstash.conf:

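cat <<'EOF' > logstash.conf
input {
  file {
    #path => "/var/lib/docker/containers/*/*-json.log" # Docker
    path => "/var/log/pods/*/*/*.log" # containerd
    start_position => "beginning"
    type => "jsonfile-daemonset-applog"
  }
  file {
    path => "/var/log/*.log"
    start_position => "beginning"
    type => "jsonfile-daemonset-syslog"
  }
}
output {
  if [type] == "jsonfile-daemonset-applog" {
    kafka {
      bootstrap_servers => "${KAFKA_SERVER}"
      topic_id => "${TOPIC_ID}"
      batch_size => 16384 # Kafka producer batch size in bytes (batches sent to Kafka, not to ES)
      codec => "${CODEC}"
    }
  }
  if [type] == "jsonfile-daemonset-syslog" {
    kafka {
      bootstrap_servers => "${KAFKA_SERVER}"
      topic_id => "${TOPIC_ID}"
      batch_size => 16384
      codec => "${CODEC}" # system log lines are plain text; the json codec wraps each line into a JSON event
    }
  }
}
EOF

Note the quoted 'EOF': with an unquoted delimiter the shell would replace ${KAFKA_SERVER}, ${TOPIC_ID} and ${CODEC} with (usually empty) shell variables while writing the file, instead of leaving them for Logstash to read from the container environment.

logstash.yml: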

cat <<'EOF' > logstash.yml
http.host: "0.0.0.0"
#xpack.monitoring.elasticsearch.hosts: [ "http://elasticsearch:9200" ]
EOF

Install buildkit:

Download: https://github.com/moby/buildkit/releases/download/v0.11.6/buildkit-v0.11.6.linux-amd64.tar.gz

Install the buildkit client (buildctl) and the buildkitd daemon:

tar zxvf buildkit-v0.11.6.linux-amd64.tar.gz && cd bin && cp buildctl buildkitd /usr/bin

Run buildkitd as a systemd service:

cat <<'EOF' > /usr/lib/systemd/system/buildkitd.service
[Unit]
Description=/usr/bin/buildkitd
ConditionPathExists=/usr/bin/buildkitd
After=containerd.service

[Service]
Type=simple
ExecStart=/usr/bin/buildkitd
User=root
Restart=on-failure
RestartSec=1500ms

[Install]
WantedBy=multi-user.target
EOF

Start it and enable it at boot:

systemctl daemon-reload && systemctl restart buildkitd && systemctl enable buildkitd

Verify the installation:

root@k8s-master:~# buildctl -version
buildctl github.com/moby/buildkit v0.11.6 2951a28cd7085eb18979b1f710678623d94ed578

root@k8s-master:~# systemctl status buildkitd

Build the image:

cat <<'EOF' > Dockerfile
FROM logstash:7.12.1
USER root
WORKDIR /usr/share/logstash
# copy the logstash.yml created above into the image
ADD logstash.yml /usr/share/logstash/config/logstash.yml
# copy the logstash.conf created above into the image
ADD logstash.conf /usr/share/logstash/pipeline/logstash.conf
EOF

nerdctl build -t registry.cn-shanghai.aliyuncs.com/buildx/logstash:v7.12.1-json-file-log-v1 .
nerdctl push registry.cn-shanghai.aliyuncs.com/buildx/logstash:v7.12.1-json-file-log-v1

Create the Pods:

root@k8s-master:~#kubectl apply -f DaemonSet-logstash.yaml

daemonset.apps/logstash-elasticsearch created验证Pod是否runing:

root@k8s-master:~#kubectl get pod -n kube-system

logstash-elasticsearch-jwptb 1/1 Running 0 2m34s

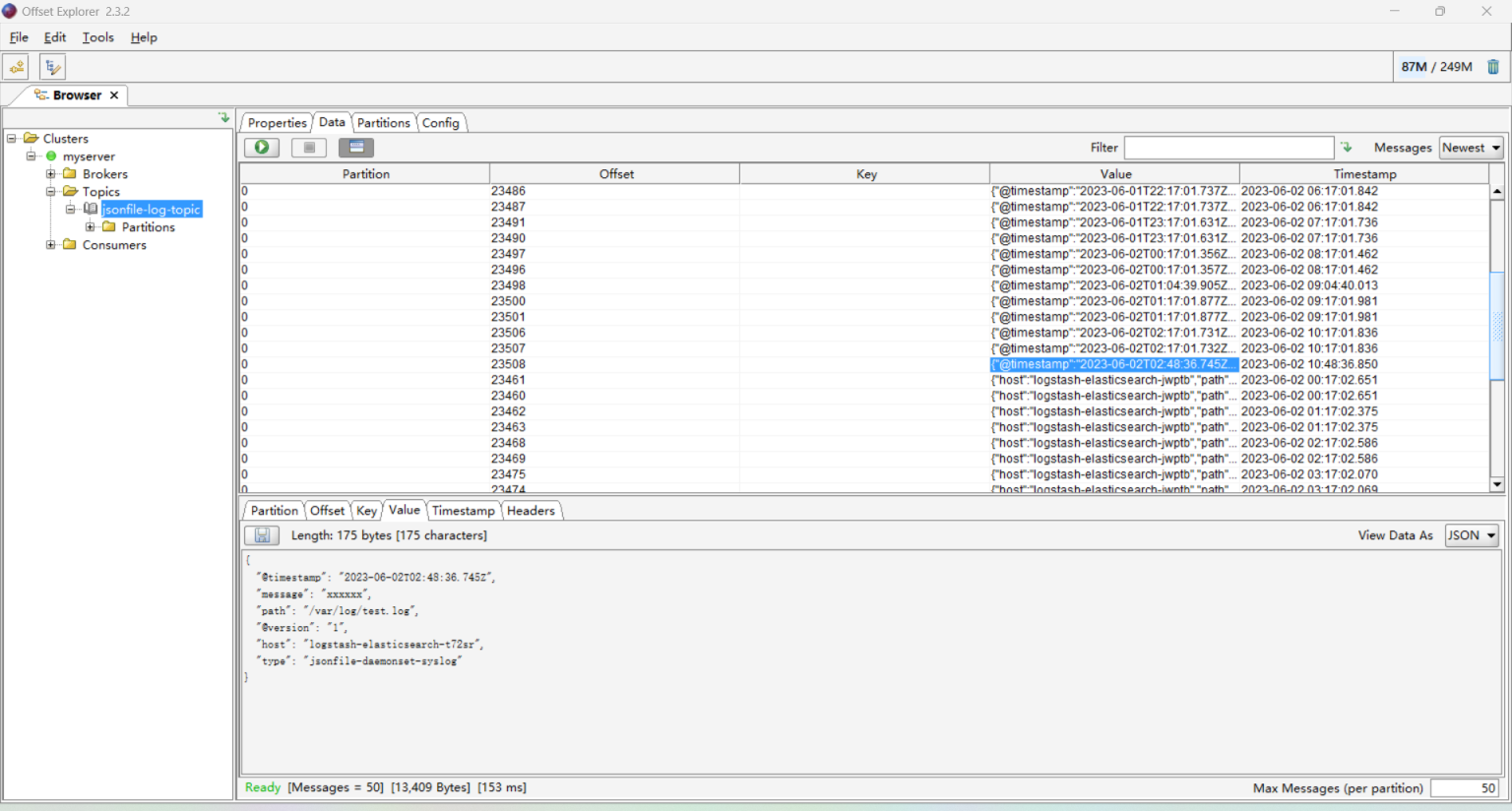

logstash-elasticsearch-t72sr 1/1 Running 0 2m44sPod runing起来后可以去kafka查看收集到的信息:
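When reading these messages, note that with containerd the files under /var/log/pods are not Docker json-file logs: each line uses the CRI log format `<RFC 3339 timestamp> <stdout|stderr> <P|F> <message>`, where `P` marks a partial piece of a long line that the runtime split and `F` the final piece. The DaemonSet pipeline above has no filter section, so these lines arrive unparsed in the event's `message` field. The POSIX sh sketch below, built around a hypothetical cri_join function (not part of any tool), shows how such lines can be split and how partial pieces are joined per stream; inside Logstash the equivalent would usually be a filter such as dissect or grok, which this setup does not configure.

```shell
# Sketch: read CRI-format container log lines on stdin and print one
# "<stream><TAB><message>" line per complete message, joining P (partial)
# pieces until the F (full) piece on the same stream arrives.
cri_join() {
  out_buf=''
  err_buf=''
  while IFS= read -r line || [ -n "$line" ]; do
    rest=${line#* }        # drop the timestamp field
    stream=${rest%% *}     # stdout or stderr
    rest=${rest#* }
    tag=${rest%% *}        # P = partial, F = full
    msg=${rest#* }         # remainder is the log text, spaces preserved

    if [ "$stream" = stderr ]; then
      err_buf=$err_buf$msg
      buf=$err_buf
    else
      out_buf=$out_buf$msg
      buf=$out_buf
    fi

    [ "$tag" = F ] || continue   # keep buffering until the final piece
    printf '%s\t%s\n' "$stream" "$buf"
    if [ "$stream" = stderr ]; then err_buf=''; else out_buf=''; fi
  done
}

# Usage: cri_join < /var/log/pods/<namespace>_<pod>_<uid>/<container>/0.log
```

Buffers are kept per stream because stdout and stderr pieces of different messages can interleave in the same file.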

Deploy the external Logstash:

dpkg -i logstash-7.12.1-amd64.deb

Add the logstash.conf file:

cat <<'EOF' > /etc/logstash/conf.d/logstash.conf
input {
  kafka {
    bootstrap_servers => "192.168.60.163:9092,192.168.60.164:9092,192.168.60.165:9092"
    topics => ["jsonfile-log-topic"]
    codec => "json"
  }
}
output {
  #if [fields][type] == "app1-access-log" {
  if [type] == "jsonfile-daemonset-applog" {
    elasticsearch {
      hosts => ["192.168.60.163:9200","192.168.60.164:9200","192.168.60.165:9200"]
      index => "jsonfile-daemonset-applog-%{+YYYY.MM.dd}"
    }
  }
  if [type] == "jsonfile-daemonset-syslog" {
    elasticsearch {
      hosts => ["192.168.60.163:9200","192.168.60.164:9200","192.168.60.165:9200"]
      index => "jsonfile-daemonset-syslog-%{+YYYY.MM.dd}"
    }
  }
}
EOF

Restart Logstash to apply the configuration:

systemctl restart logstash

Verify the configuration:

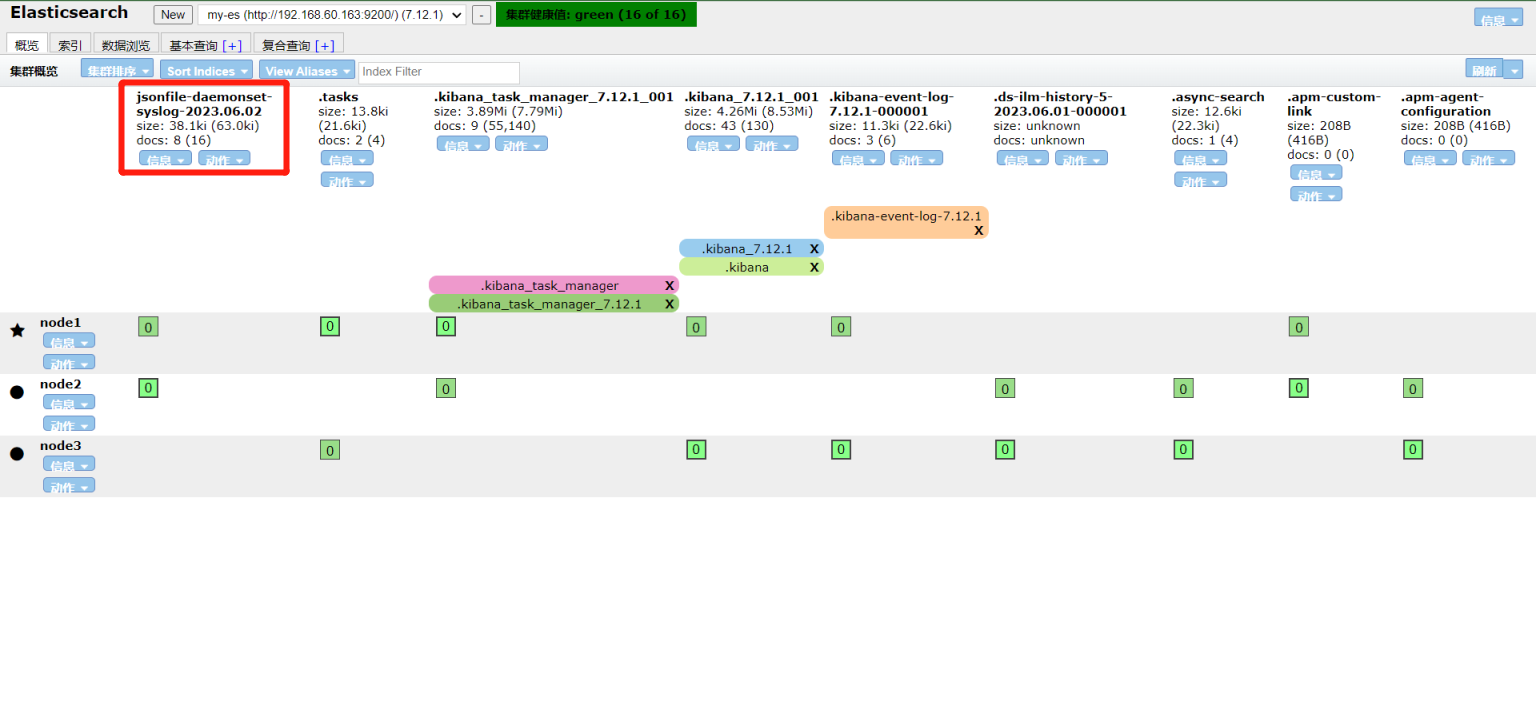

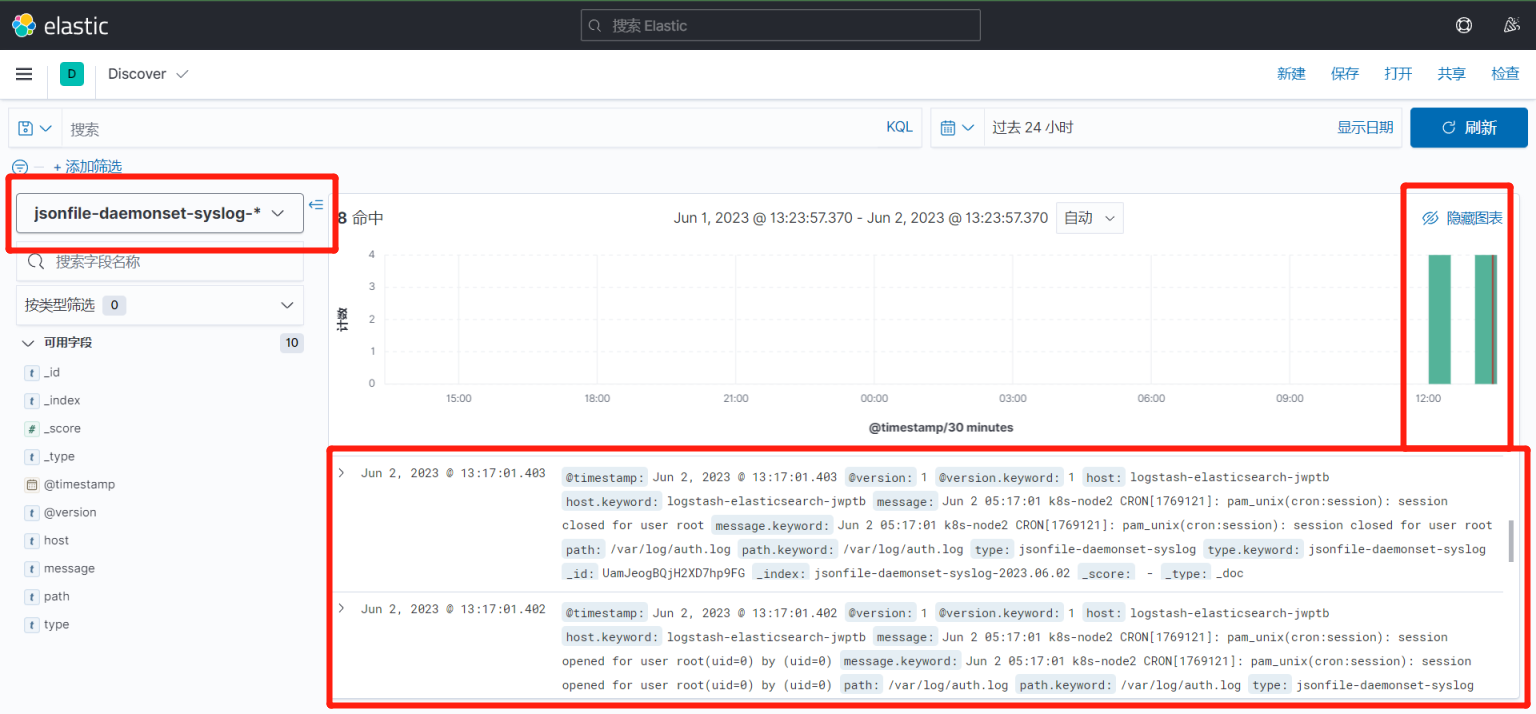

Check the indices in ES and in Kibana to confirm that the logs are being collected.

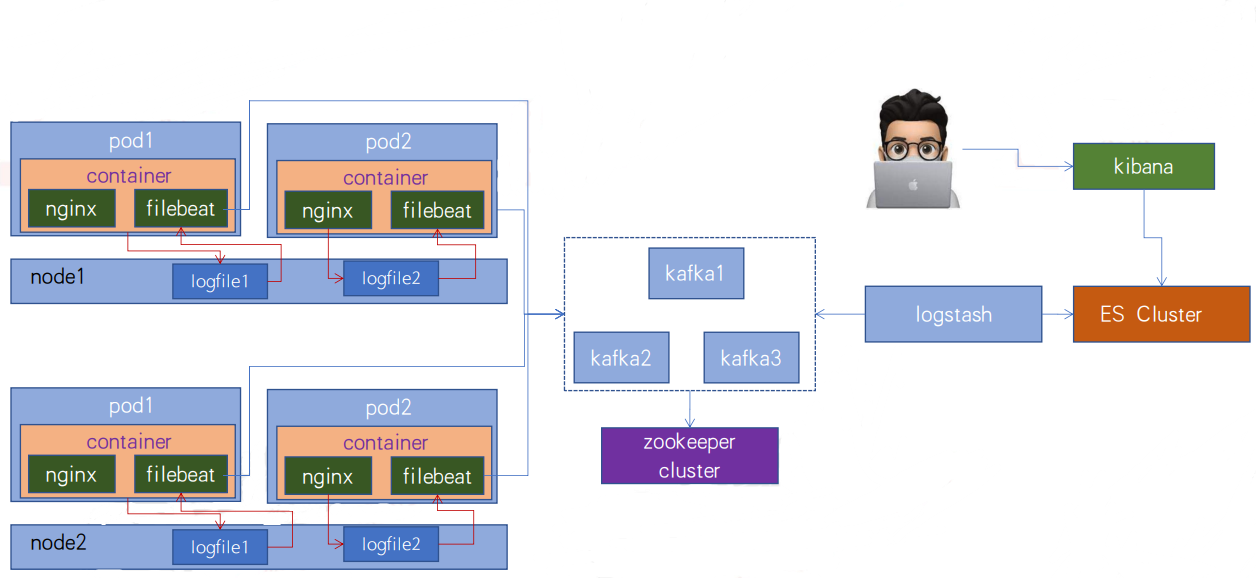
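When matching indices to log lines, keep in mind that `%{+YYYY.MM.dd}` in the index name is rendered from the event's @timestamp, which Logstash keeps in UTC (here it is the time the DaemonSet Logstash read the line, since no date filter is configured). On nodes running in UTC+8, an index such as jsonfile-daemonset-applog-2023.05.31 therefore also contains events from before 08:00 local time on June 1. The sketch below mimics the routing of the external Logstash output section for a given type and timestamp; index_for is a hypothetical helper, and it assumes GNU date (coreutils) for parsing ISO 8601 timestamps.

```shell
# Sketch: which Elasticsearch index the external Logstash output would
# write an event to, given its [type] and @timestamp.
# Requires GNU date (coreutils) for `date -u -d`.
index_for() {
  event_type=$1
  timestamp=$2
  case $event_type in
    jsonfile-daemonset-applog|jsonfile-daemonset-syslog) ;;
    # no `if [type] == ...` branch matches, so the event is not sent to ES
    *) return 1 ;;
  esac
  # %{+YYYY.MM.dd} is rendered from @timestamp in UTC, not local time
  day=$(date -u -d "$timestamp" +%Y.%m.%d) || return 1
  printf '%s-%s\n' "$event_type" "$day"
}

# Usage: index_for jsonfile-daemonset-applog 2023-06-01T01:30:00+08:00
```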

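Example 2: log collection with the sidecar pattern:

Several containers run in one Pod, and one of them collects the logs of one or more business containers in the same Pod (the business containers and the sidecar usually share logs through an emptyDir volume).

Implementation:

Run two containers in one Pod: a business container and a sidecar container. The business container writes the logs and the sidecar collects them. Because containers in a Pod have isolated filesystems, the log directory has to be shared through an emptyDir volume. The sidecar sends the collected logs to Kafka, a Logstash running outside the cluster consumes the Kafka messages and stores them in ES, and in Kibana you create an index pattern to view the logs.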
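The shared emptyDir only makes the files visible; the sidecar then reads each file incrementally and remembers how far it got. Logstash's file input records these read positions in its sincedb, which is also why `start_position => "beginning"` only affects files it has never seen before. The POSIX sh sketch below illustrates that offset-tracking idea with a hypothetical helper, read_new; it is not how Logstash is implemented and handles rotation only through a simple "file got smaller" check.

```shell
# Sketch: print only the bytes appended to a log file since the previous call,
# keeping the last read position in a separate state file.
read_new() {
  log_file=$1
  state_file=$2
  offset=$(cat "$state_file" 2>/dev/null || echo 0)
  size=$(($(wc -c < "$log_file")))   # arithmetic strips any padding from wc
  # the file shrank (truncated or replaced): start again from the beginning
  if [ "$size" -lt "$offset" ]; then
    offset=0
  fi
  # copy exactly the new bytes; data appended after `wc` ran is left for the next call
  dd if="$log_file" bs=1 skip="$offset" count=$((size - offset)) 2>/dev/null
  echo "$size" > "$state_file"
}

# Usage in a sidecar loop (paths are hypothetical):
# while :; do read_new /var/log/applog/catalina.out /tmp/catalina.offset; sleep 1; done
```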

Build the sidecar image:

Sidecar (Logstash) configuration file:

cat <<'EOF' > logstash.conf
input {
  file {
    path => "/var/log/applog/catalina.out"
    start_position => "beginning"
    type => "app1-sidecar-catalina-log"
  }
  file {
    path => "/var/log/applog/localhost_access_log.*.txt"
    start_position => "beginning"
    type => "app1-sidecar-access-log"
  }
}
output {
  if [type] == "app1-sidecar-catalina-log" {
    kafka {
      bootstrap_servers => "${KAFKA_SERVER}"
      topic_id => "${TOPIC_ID}"
      batch_size => 16384 # Kafka producer batch size in bytes (batches sent to Kafka, not to ES)
      codec => "${CODEC}"
    }
  }
  if [type] == "app1-sidecar-access-log" {
    kafka {
      bootstrap_servers => "${KAFKA_SERVER}"
      topic_id => "${TOPIC_ID}"
      batch_size => 16384
      codec => "${CODEC}"
    }
  }
}
EOF

The quoted 'EOF' keeps the shell from expanding ${KAFKA_SERVER}, ${TOPIC_ID} and ${CODEC} while writing the file; Logstash reads them from the container environment at runtime.

logstash.yml:

cat <<'EOF' > logstash.yml
http.host: "0.0.0.0"
#xpack.monitoring.elasticsearch.hosts: [ "http://elasticsearch:9200" ]
EOF

Dockerfile:

cat <<'EOF' > Dockerfile
FROM logstash:7.12.1
USER root
WORKDIR /usr/share/logstash
#RUN rm -rf config/logstash-sample.conf
ADD logstash.yml /usr/share/logstash/config/logstash.yml
ADD logstash.conf /usr/share/logstash/pipeline/logstash.conf
EOF

Build the image:

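nerdctl build -t registry.cn-shanghai.aliyuncs.com/buildx/logstash:v7.12.1-sidecar .
nerdctl push registry.cn-shanghai.aliyuncs.com/buildx/logstash:v7.12.1-sidecar

You can also use the prebuilt image registry.cn-shanghai.aliyuncs.com/buildx/logstash:v7.12.1-sidecar.

Deploy the sidecar container:

tomcat-app1 YAML: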

cat <<'EOF' > tomcat-app1-svc.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: tomcat-app1-deployment-label
  name: tomcat-app1-deployment # name of this Deployment
  namespace: kube-system
spec:
  replicas: 2
  selector:
    matchLabels:
      app: tomcat-app1-selector
  template:
    metadata:
      labels:
        app: tomcat-app1-selector
    spec:
      containers:
      - name: tomcat-app1-container
        image: registry.cn-shanghai.aliyuncs.com/qwx_images/tomcat-app1:v1
        imagePullPolicy: IfNotPresent
        #imagePullPolicy: Always
        ports:
        - containerPort: 8080
          protocol: TCP
          name: http
        env:
        - name: "password"
          value: "123456"
        - name: "age"
          value: "18"
        resources:
          limits:
            cpu: 1
            memory: "512Mi"
          requests:
            cpu: 500m
            memory: "512Mi"
        volumeMounts:
        - name: applogs
          mountPath: /apps/tomcat/logs
        startupProbe:
          httpGet:
            path: /myapp/index.html
            port: 8080
          initialDelaySeconds: 5 # wait 5s before the first probe
          failureThreshold: 3 # consecutive failures before the probe counts as failed
          periodSeconds: 3 # probe interval
        readinessProbe:
          httpGet:
            #path: /monitor/monitor.html
            path: /myapp/index.html
            port: 8080
          initialDelaySeconds: 5
          periodSeconds: 3
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 3
        livenessProbe:
          httpGet:
            #path: /monitor/monitor.html
            path: /myapp/index.html
            port: 8080
          initialDelaySeconds: 5
          periodSeconds: 3
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 3
      - name: sidecar-container
        image: registry.cn-shanghai.aliyuncs.com/buildx/logstash:v7.12.1-sidecar
        imagePullPolicy: IfNotPresent
        #imagePullPolicy: Always
        env:
        - name: "KAFKA_SERVER"
          value: "192.168.60.163:9092,192.168.60.164:9092,192.168.60.165:9092"
        - name: "TOPIC_ID"
          value: "tomcat-app1-topic"
        - name: "CODEC"
          value: "json"
        volumeMounts:
        - name: applogs
          mountPath: /var/log/applog # must match the paths in the sidecar (Logstash) configuration file above
      volumes:
      - name: applogs # emptyDir shared by the business container and the sidecar, so the sidecar can read the business logs
        emptyDir: {}
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: tomcat-app1-service-label
  name: tomcat-app1-service
  namespace: kube-system
spec:
  type: NodePort
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 8080
    nodePort: 40080 # outside the default NodePort range 30000-32767; needs a widened --service-node-port-range on kube-apiserver
  selector:
    app: tomcat-app1-selector
EOF

Create the Pods:

kubectl apply -f tomcat-app1-svc.yaml

Prepare the configuration file for the external Logstash:

cat <<EOF> logsatsh-sidecar-kafka-to-es.conf

input {

kafka {

bootstrap_servers => "192.168.60.163:9092,192.168.60.164:9092,192.168.60.165:9092"

topics => ["tomcat-app1-topic"]

codec => "json"

}

}

output {

if [type] == "app1-sidecar-access-log" {

elasticsearch {

hosts => ["192.168.60.163:9200","192.168.60.164:9200","192.168.60.165:9200"]

index => "sidecar-app1-accesslog-%{+YYYY.MM.dd}"

}

}

if [type] == "app1-sidecar-catalina-log" {

elasticsearch {

hosts => ["192.168.60.163:9200","192.168.60.164:9200","192.168.60.165:9200"]

index => "sidecar-app1-catalinalog-%{+YYYY.MM.dd}"

}

}

}

EOF重启logsatsh使其配置生效:

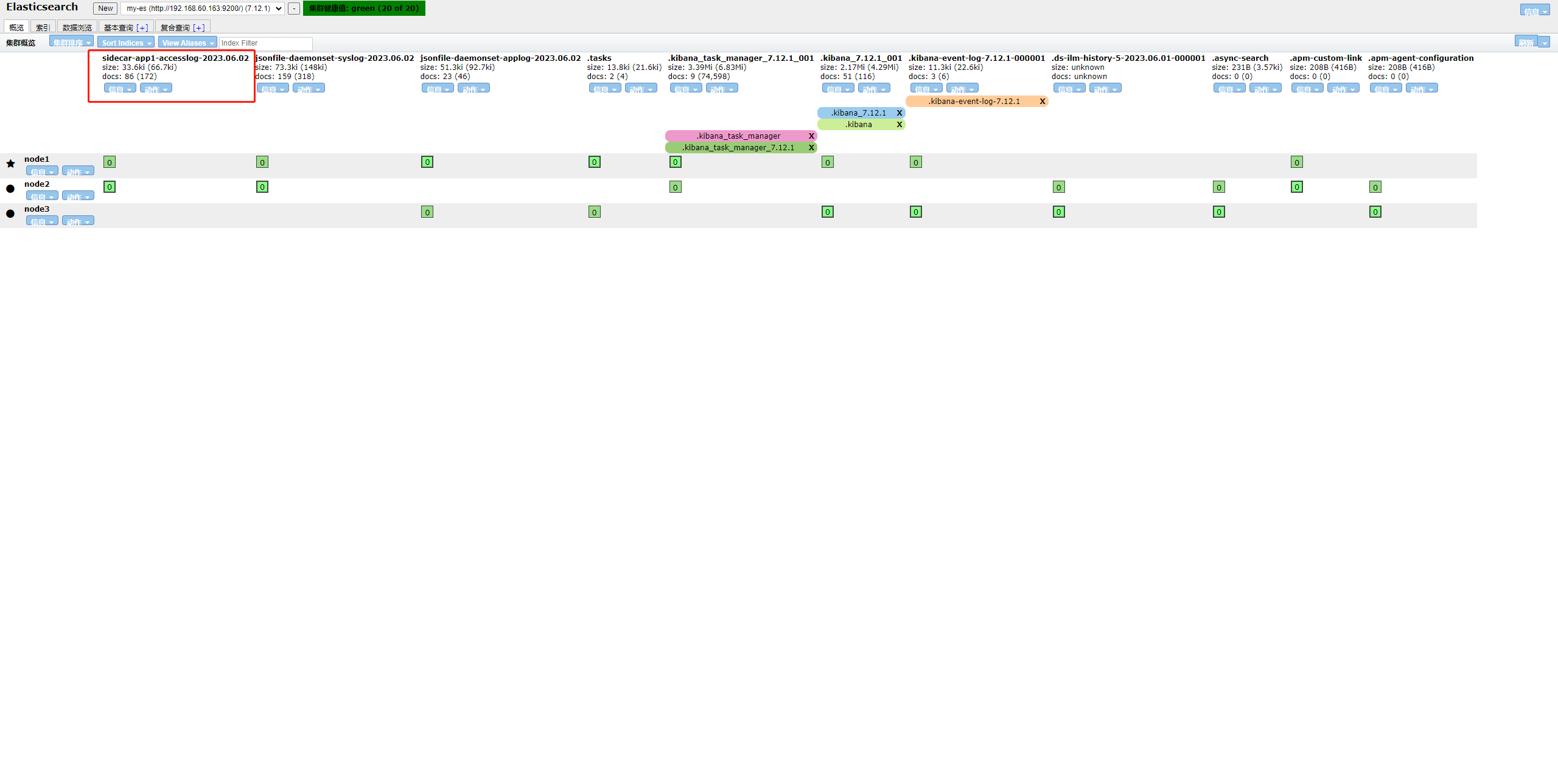

systemctl restart logsatsh验证是否收集成功:

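Example 3: a log collection process built into the container

A log collection process runs inside the container and collects the logs of the business process in that same container.

Implementation:

Start two processes in one container (and therefore in one Pod): the business process and a log collection process (filebeat is recommended). The business process and filebeat are built into the same image, which is then deployed with a Pod controller such as a Deployment.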
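Because both processes live in one container, the image needs an entrypoint that starts them together. The following is a minimal POSIX sh sketch, assuming the collector runs in the background while the business process decides the container's lifetime; run_with_collector is a hypothetical helper, and the filebeat and Tomcat paths in the usage comment are assumptions. Production images often hand this job to a small init or process supervisor (for example tini or supervisord) instead.

```shell
# Sketch of a container entrypoint for the "built-in collector" pattern.
# $1: collector command line (run via sh -c); remaining args: business command.
run_with_collector() {
  collector_cmd=$1
  shift
  sh -c "$collector_cmd" &   # log collector in the background
  collector_pid=$!
  "$@" &                     # business process
  app_pid=$!
  # forward SIGTERM/SIGINT (e.g. from the kubelet on Pod deletion) to the business process
  trap 'kill -TERM "$app_pid" 2>/dev/null' TERM INT
  wait "$app_pid"
  app_status=$?
  # `wait` returns early when a trapped signal arrives; wait again for the real exit
  if kill -0 "$app_pid" 2>/dev/null; then
    wait "$app_pid"
    app_status=$?
  fi
  trap - TERM INT
  # the container stops with the business process, so stop the collector too
  kill "$collector_pid" 2>/dev/null
  wait "$collector_pid" 2>/dev/null
  return "$app_status"
}

# Example entrypoint (hypothetical paths):
# run_with_collector "filebeat -e -c /etc/filebeat/filebeat.yml" /apps/tomcat/bin/catalina.sh run
```

Returning the business process's exit status lets Kubernetes restart the container based on the application, not the collector.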

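Summary:

Example 1 (DaemonSet collecting json-file logs):

Pros:

Low resource overhead, easy to configure

Cons:

Logs from all containers end up mixed together and are hard to tell apart

Example 2 (sidecar log collection):

Pros:

Collection is isolated per Pod, allowing fine-grained, per-service logs

Cons:

Higher resource overhead than example 1, and the logs must be shared through an emptyDir volume

Example 3 (log collection process built into the container):

Pros:

Both processes run in the same container and share one filesystem, so no volume mount is needed; the collector reads the target log paths directly

Cons:

Higher resource overhead than example 1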
